Disrupting the Visualisation of Reality

Interview with Neil Tocher, CTO, NCTech

The way we capture reality is changing rapidly. One of the pioneers leading this change is NCTech, a Scotland-based company specialising in 360-degree reality imaging systems. The company works with renowned names such as Apple, Paramount and Pixar, and also with geospatial powerhouses like Leica, Topcon and Trimble. 'GIM International' talked with co-founder Neil Tocher about the strong ambition to enable everyone to capture reality and to democratise and disrupt the industry by making virtual reality the new standard for visualising our environment. A range of new applications is looming for the geospatial sector. Are we on the brink of a major transformation?

(By Wim van Wegen, content manager, GIM International)

Let’s start by taking a look at the geospatial industry. Traditional survey techniques are still widely used, but there are also new methods for mapping and surveying. Where do we currently stand?

You’re absolutely right. It’s not an industry that you want to change overnight; the traditional methods do have their value. The industry is all about accuracy, so we are looking at the workflow and at how we can make things easier and more efficient. The industry of tomorrow will be one with an ‘image first, scan second’ approach, and we hope to push the market towards this. This means imaging will be much easier and faster to collect on site than Lidar, which is a very reliable method of measuring. Photogrammetry has been around a very long time, but you can get varying results and there are a lot of known issues with it. We’re combining both: photogrammetry is the fast solution but we’re also backing that up with some basic Lidar to help the transition to faster and easier visualisation of reality. It’s all about the delivery as well – what you’re doing with that data. If it’s for visualisation, high-accuracy tools aren’t required; it can be done much faster with new tools that are designed for that process, which is what we’re heavily focusing on.

How is your company contributing to the industry’s transformation?

The transformation is about the workflow. By combining and introducing these new hardware solutions, we’re trying to gradually step over to a more photography-based technique rather than Lidar, hand in hand with our visualisation tools and backed up with the improvements that are happening in the cloud. People talk a lot about the cloud, but so far it’s mainly been somewhere to host your data for sharing. We’re really enhancing the power of the cloud with Intel and with Google, and we’re gearing up the processing power that we have to handle big datasets and produce much more accurate in-depth processes with the data that we’re collecting. So the accuracy is there and we can handle it; these new hardware tools plus new tools in the cloud deliver a whole new way of processing. We’re trying to move away from having all the data come back to the office to be processed on a powerful workstation, which can be very time-consuming. We’re splitting that processing up over multiple hardware devices in the field and those individual devices are pushing to the cloud – a more collaborative network of hardware, or the Internet of Things (IoT) as they call it these days. We’re bringing in other technologies as well, such as drones, which we see as being a big part of what we do moving forward. We hope to bring these technologies into these industries seamlessly and also fully autonomously.

You mentioned unmanned aerial vehicles (UAVs or ‘drones’) – what role do they play in your company?

Drones are a bit like virtual reality (VR) – a buzzword that everyone is talking about but not really harnessing yet. Drones are being flown around above survey sites and the data is being uploaded to show you a bird’s-eye view, and sometimes, using photogrammetry and Autodesk, a 3D model is created – that’s great. But we’re working on new sensor technology with Intel that can be UAV-mounted as well as vehicle-mounted and tripod-mounted. So we’ll have a whole new range of sensors to add to our armoury for pushing to the cloud, and drones are just another way of automating that collection service. One scenario could be on a construction site: if you want a regular daily or weekly scan on site to see how things are developing, drones could be setting off in the evenings and scanning when the site is quiet. So drones are just one more asset in the overall reality capture hardware.

You started in 2010 with the launch of your iStar camera. How has your company matured since then?

It’s growing – fairly steadily until recently. The iStar camera was originally developed for the police and for the military. When we entered the engineering & construction (E&C) market, the camera hardware itself was capable but the workflow wasn’t, so we spent some time developing the software tools and integrations to make the camera fully integrated with the workflow and the current software. That lifted the barrier to market for the camera and it became very successful in E&C for colourising existing scan data. We’ve been working in other industries such as professional photography, we’re working with Google, and we’re about to embark on some large projects which include new hardware developments, the fruits of several of which we’ve announced recently: the LASiris VR, the iris Pro and the iris VR. Another new product is our mobile mapping camera, a high-resolution camera that is vehicle-mounted for taking images as you drive. With all of these products we’re now doing 2D imaging and 3D depth imaging, but behind them all is our new cloud platform. Now that we have the complete package from end to end – from capture all the way through to delivery – this year it’s all about realising all those products and about gearing up for high volume, especially since we’re entering the consumer market with the iris VR, for which we’re working closely with Intel, Sony and Google. That gives us access to a broad array of customers, from consumers all the way up to construction. We’re very excited about pushing VR and the capabilities of these products and getting them into the hands of consumers. This will disrupt and democratise VR; it’s going to open up and empower the whole VR industry to be a lot more creative – which will be very beneficial for industries such as construction as well.

Virtual reality is hotly tipped as one of the potential disruptors in the industry. What are your expectations, and what do you regard as relevant application fields?

We believe it’s important to educate the industry. If it’s left to the big names out there it will be pushed down a certain route for a commercial reason, whatever that may be. We are a smaller company but we share the same vision as Google and Intel. We really want to get the best possible product we can into the consumer market, to empower people to capture their own reality that’s around them. It’s only when you can get reality capture at that scale that VR will really become a success. It’s kind of like YouTube: when everyone uploads their own content, it becomes unstoppable as a huge, reliable tool. We feel that if only the big names in the industry are doing it then it has a chance of failure – it will be exciting for the first year or so that people use it, but that content then becomes stagnant or less relevant to the user base. So we want to make sure that we let users create their own content and hence minimise the chance of failure. This will benefit all the developers and all the hardware companies, whether for visualisation, for displaying VR, for all the associated accessories and so on – everyone is going to win from that. Behind this movement you need a very simple solution; as soon as you move to consumer it needs to be a one-button process to the cloud. NCTech is about all of this: straight-out-of-the-box tools that are doing an amazing job, with the whole process carried out automatically. If you want to use the data to do something very creative with it, you can. We don’t lock people into proprietary systems; that would only be prohibitive to further developing new things.

Hybrid technologies are gaining ground, such as the combined collection and processing of imaging and Lidar data. Are you also involved in these developments?

Yes, all of our new products that are collecting depth are also collecting imaging at the same time. Whether it’s a static product on a tripod or a mobile product on a UAV or on a vehicle, they are generally collecting the RGB colour information as well as the depth information. This is an idea that we’re investing a lot of time and a lot of development work in. Whenever NCTech is mentioned, I expect most people think we are a hardware company. We’re actually a software company more so than a hardware one. The hardware is really developed to enable the software to deliver the output. When you buy a camera you don’t buy it for its look and feel, you buy a camera to take pictures; the image is the product that you’re buying into. It’s the same with our products – they are there for a reason, namely to capture data, and that data needs to be processed. So we put a huge investment into the processing of that data and making it available to everyone through an automatic pipeline. From the point of capture up to the cloud and in delivery, whether that’s to VR or simply a third-party application, we want to make sure that our software in the cloud handles colour image, scan data and everything else in between – videos and so on. Whereas the hardware development basically stops once you start shipping, the software development is a never-ending story.

Processing and visualisation of captured data are becoming more and more important. Which trends do you expect to succeed?

The visualisation of data really depends on the size of your data. Zooming in on the devices of today, everything is about the Internet of Things: multiple types of device communicating over the internet. In everything we do, it’s important to us that all of our products and services are available through the internet, to multiple platforms and devices – whether phones or computers – which means through the cloud and through WebGL for the user interface. No matter how complex or how big that dataset is, we’ve been working on ways of pulling that data down to satisfy and to maximise the potential of the device you’re looking at that data on. If you’re using a low-resolution screen, it will only provide you that resolution of data because that’s all you can see. But if you’re looking at it on your desktop computer, you will get a much higher-resolution download of that content. For us it’s all about the cloud and IoT – we feel that’s the future for data sharing. It’s not about a desktop computer and people sending large files to each other, it’s about streaming data almost on the fly. It’s about the collaboration of the devices out there doing the collection, the cloud doing the processing and people dialling in and pulling out what they need at that point in time. That’s the most efficient way forward for everyone.
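
As a rough illustration of the device-dependent delivery described above, the following Python sketch picks the smallest resolution tier that still covers the viewing device's screen. The tier names, pixel sizes and the `pick_tier` function are hypothetical and chosen only for this example; the interview does not say how NCTech's platform actually selects what to stream.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """One pre-processed version of a dataset (hypothetical structure)."""
    name: str
    max_edge_px: int  # longest image edge available at this tier


# Assumed tiers for illustration only; real platforms may differ.
TIERS = [
    Tier("low", 1024),
    Tier("medium", 2048),
    Tier("high", 4096),
    Tier("full", 8192),
]


def pick_tier(screen_long_edge_px, tiers=TIERS):
    """Return the smallest tier that covers the screen.

    If the screen is larger than every tier, fall back to the largest one.
    """
    ordered = sorted(tiers, key=lambda t: t.max_edge_px)
    for tier in ordered:
        if tier.max_edge_px >= screen_long_edge_px:
            return tier
    return ordered[-1]


if __name__ == "__main__":
    # A phone-sized screen and a 4K desktop monitor get different tiers.
    print(pick_tier(800).name)
    print(pick_tier(3840).name)
```

The point of choosing the smallest sufficient tier is that a device never downloads more detail than its screen can show, which matches the idea of streaming only what is needed at that moment.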

You are pioneering in VR point clouds. Can you explain for us what VR point clouds are and why they are so relevant?

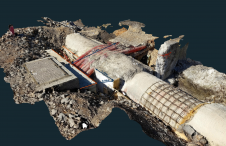

Point clouds in the E&C market are generally created by laser scanners and can be very large, dense files to download. If the files don’t have colour information they can be very hard to make sense of for a layman, and therefore you need an experienced user to understand how to manipulate these point clouds and to understand what you’re looking at. Adding colour to point clouds gives them a whole new dimension because human brains find it easier to understand colour. So colour helps you to make sense of a point cloud, but the file size is still huge. As an interface it can look like a poor photograph of the real world from a different view point, and as you go closer the points become sparse and disappear. So point clouds are great as a tool for measuring, but VR is about creating and taking a real-world place and virtualising it. Our view on point clouds is: we understand that high-end laser scanners can identify a wrinkle in a carpet, but do you need that level of accuracy for the visualisation of a space? The answer is no. You just need to know that the space has a carpet, and you need to know its size and dimensions, but you don’t need to know its texture or that there’s a wrinkle. Therefore you don’t need a high-density point cloud. Another issue is, whether you have a VR headset on or not, when you’re viewing a point cloud it still doesn’t look like a real image. It only looks real in an extremely high-resolution dense point cloud from a position that has no occlusion. As you move away from that point of origin and the occlusion kicks in and you see issues, it ruins your virtual reality experience. What you really want with VR is the ability to move around in the three-dimensional space freely, but also to take the point cloud information which is there behind the scenes. You want to use the colour photographic information and map that to simple geometry that’s been generated from the point clouds. 
The point cloud is there, but the user doesn’t see it; the geometry is also there, but the user doesn’t see it. What the user sees is a combination of both where the colour information has been mapped onto that simple geometry, giving a very lightweight model that can be moved around very rapidly. If the VR is to be viewed on a phone or a PC over an internet connection, it needs to be fast and lightweight. With VR, it’s also key that when the user turns their head the model can be moved at a rate that doesn’t cause any nausea or noticeable latency. With large point clouds that’s a big problem: the download time of all the information will create a lag and then the user will become disorientated or feel ill. Therefore it’s important that with VR, with point clouds, we streamline that for the user experience rather than for the accuracy that point clouds give you for measurements and other details. It’s a trade-off: we have to simplify to improve the experience, but if you want to introduce measurements and other features into that VR experience we can still pull the high-resolution point cloud for the area of interest into that environment from behind the scenes so you can perform advanced measurements.
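
To make the density trade-off concrete, here is a minimal Python sketch of voxel-grid downsampling, a common generic way to thin a coloured point cloud. It is not the method described in the interview, which maps photographic colour onto simplified geometry; it only illustrates how much data can be dropped when visual plausibility matters more than measurement accuracy. The function name and the `(x, y, z, r, g, b)` point layout are assumptions made for this example.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Reduce a coloured point cloud to one averaged point per voxel.

    points: iterable of (x, y, z, r, g, b) tuples (assumed layout).
    voxel_size: edge length of the cubic cells, in the same unit as x, y, z.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    # Group points by the integer index of the voxel they fall into.
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p[:3])
        buckets[key].append(p)

    # Replace each group with the mean position and mean colour.
    result = []
    for key in sorted(buckets):
        group = buckets[key]
        n = len(group)
        result.append(tuple(sum(p[i] for p in group) / n for i in range(6)))
    return result


if __name__ == "__main__":
    cloud = [
        (0.25, 0.25, 0.25, 255, 0, 0),
        (0.75, 0.75, 0.75, 0, 0, 255),
        (1.5, 0.5, 0.5, 0, 255, 0),
    ]
    # With 1-unit voxels the first two points merge into one.
    for point in voxel_downsample(cloud, 1.0):
        print(point)
```

A larger `voxel_size` gives a lighter model at the cost of detail, while the full-resolution cloud can be kept on the server for the occasions when precise measurements are needed.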

You’ve migrated your 3D visualising software ColourCloud to Google Cloud Platform, and Intel is also part of this alliance. Can you tell us a bit more about this?

Sure! As I already touched on, NCTech has a very strong and clear vision of future IoT technologies for reality capture including VR and augmented reality (AR). Google and Intel share that same vision and together we’re pushing to make the best products and experiences we can for everyone, from E&C to many other verticals. The whole point of our ‘colour cloud in the cloud’, as it were, is to enable whatever hardware product we bring to the market and to give those suites of tools we’ve developed to users without them having to understand exactly what is happening to the data they’re collecting, wherever they are. If the product needs to use our ColourCloud technology, then that process will just happen as a matter of course due to the way the data is pushed to our servers; the processing takes place without the user being aware of it. At the end of the day they get the amazing result they wanted. Using Google and Intel gives us the best-quality servers, the most powerful processing available, and maximum disruption and reach to the industry to ensure that these new products are a long-term success rather than just an overnight one. They can then continue to develop in conjunction with these large platforms. Our relationship with Intel is very strong: it’s not just at a software level or with their servers, it’s very much also on a hardware level. We’re developing new chips, new technologies, new drivers, a new workflow, all the way up to the cloud and delivery. It’s a very good alliance; those guys are very excited to be working with us, and the same holds true vice versa of course.

Which other major changes or disruptions do you foresee in the geomatics industry?

[Laughs] This is quite an interesting question, but there’s a limit to what I can disclose! Let me say this: we’ve been working on some new technology which we are very excited about, for the last two years. For example, we have recently released the LASiris VR, which was actually developed in 2015 but we sat on that product because the market wasn’t ready for it. We very much do foresee how the market should go, but we don’t just build it and then try to sell it because we think we should. We only do it when we know it’s going to succeed or when the market is ready for it. The market is now ready for the LASiris VR: there’s enough support out there for VR and the LASiris is perfect for high-end VR, hence it has been well received now. There are new technologies coming in the automotive industry, and also with drones as I’ve mentioned. We’re really excited about getting those new core technologies that we’ve developed for the high-end sector and E&C and precision down to the consumer level, because once it’s at consumer level it is then adopted everywhere. It becomes mainstream and then you can start to connect new verticals to each other through that new technology. What I’m personally looking forward to are things such as online shopping, which is an area we’ve had a vision on for many years already. Using reality capture hardware you can capture your own property or living space. There’s hardware out there to capture and scan objects; those objects are on our cloud platform and you can bring them into your own virtual reality world. With AR and VR together, it can give you a truly immersive shopping experience in your own home with accurate sizes, dimensions and so on. It adds a totally different dimension to the simple process of shopping. There’s some exciting news coming with regard to that, and we’re really looking forward to rolling out that type of vision. It’s good to realise that without adequate reality capture hardware, none of this will be possible.
We need to be able to make that hardware disruptive and low-cost. Without that, it’s never going to be something that everyone is going to adopt and use day to day. That’s the big challenge we’re pushing to solve, and I think we’re on a good road to achieving that within the next couple of years.

Neil Tocher

Neil Tocher is a John Logie Baird award winner and has been a serial entrepreneur for over 22 years, working with large multinationals including Intel, Google, Apple, Adobe and Microsoft. Tocher has helped pioneers across many sectors from government and oil & gas to movie studios and media, developing leading-edge 360-degree 3D VR technology in both software and hardware. Tocher’s extensive commercial, technical, programming and product design experience combined with his passion for innovation drives NCTech forward as a global market leader in the field of reality imaging systems.
