When photogrammetry meets Lidar: towards the airborne hybrid era

Combining airborne camera and Lidar sensors to take the mapping sector a step forwards

The airborne market for area-wide 3D data acquisition is experiencing a noticeable trend towards a hybrid mapping concept. In the near future, airborne data collection will increasingly be performed by a combination of active and passive sensors. There are two main reasons for this. Firstly, collecting all relevant data in a single flight is an efficient and cost-effective solution, even when flying restrictions and regulations are factored in. Secondly, exploiting the advantages of both light detection and ranging (Lidar) and dense image matching (DIM) point clouds will improve the quality of the final geospatial products. This article reports on the latest developments in the field of airborne hybrid systems, including both data acquisition and processing.

Almost all new generations of airborne Lidar systems integrate a Lidar unit and a passive imaging unit (in a single-camera or multi-camera fashion) in the same platform for concurrent acquisition of ranging and imagery data. Different combinations of the most recent technological trends in ranging and imaging are available, including (i) single-photon Lidar (SPL) or linear mode, multi-spectral and topo-bathymetric laser scanners, and (ii) nadir and oblique-looking cameras, equipped with RGB, NIR or hyperspectral sensors.

Hybrid sensor systems

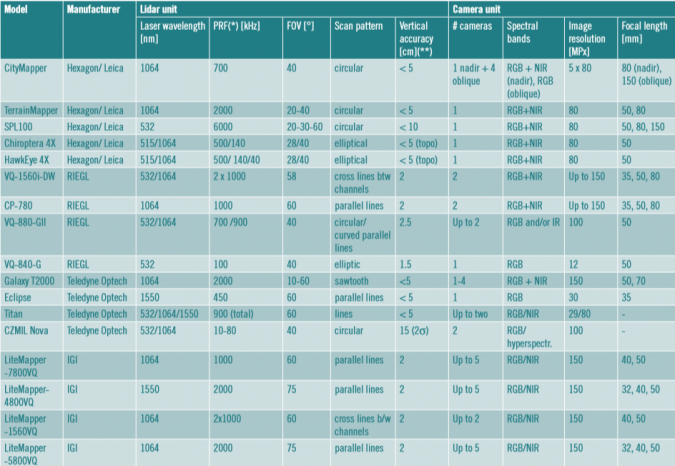

Table 1 provides an overview of the most recent hybrid sensor systems available on the market, including their main technical specifications as stated in the instrument datasheets. As far as data collection is concerned, simultaneous multi-sensor acquisition from the same platform reduces the total flying time and costs, since all data of interest is collected in a single flight. However, there are still some challenges to tackle, such as planning the optimal conditions for concurrent acquisition of Lidar and images. Both flying-related parameters (e.g. height above ground and strip/image overlap) and environmental ones (e.g. time of day and season) should be carefully selected in order to find the best compromise for successful image matching and laser scanning.
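To make the planning trade-off concrete, the following is a minimal sketch of how the flying-related parameters interact for a nadir frame camera and a scanning Lidar flown together. It assumes flat terrain, one return per pulse and an even point distribution across the swath. The function names and all numeric inputs are hypothetical illustration values, not specifications of any system in Table 1.

```python
import math
from dataclasses import dataclass


@dataclass
class FramePlan:
    gsd_m: float            # ground sampling distance (metres per pixel)
    base_m: float           # spacing between consecutive exposures
    strip_spacing_m: float  # spacing between adjacent flight lines


def plan_frame_camera(height_m, focal_mm, pixel_um, px_along, px_across,
                      forward_overlap, side_overlap):
    """Nadir frame camera over flat terrain (simplifying assumption)."""
    # GSD follows from the image scale: height / focal length * pixel size.
    gsd = height_m * (pixel_um * 1e-6) / (focal_mm * 1e-3)
    footprint_along = px_along * gsd
    footprint_across = px_across * gsd
    return FramePlan(
        gsd_m=gsd,
        base_m=footprint_along * (1.0 - forward_overlap),
        strip_spacing_m=footprint_across * (1.0 - side_overlap),
    )


def lidar_strip_density(pulse_rate_hz, speed_ms, height_m, fov_deg):
    """Mean pulses per square metre in a single strip.

    Assumes one return per pulse and an even distribution over the swath.
    """
    swath_m = 2.0 * height_m * math.tan(math.radians(fov_deg) / 2.0)
    return pulse_rate_hz / (speed_ms * swath_m)


# Hypothetical example values, chosen only for illustration.
plan = plan_frame_camera(1000.0, 100.0, 4.0, 10000, 14000, 0.80, 0.60)
density = lidar_strip_density(500_000, 60.0, 1000.0, 60.0)

print(f"GSD: {plan.gsd_m * 100:.1f} cm, base: {plan.base_m:.0f} m, "
      f"strip spacing: {plan.strip_spacing_m:.0f} m")
print(f"Lidar density: {density:.1f} points/m^2 per strip")
```

The sketch shows why a compromise is needed: the strip spacing is set by the camera's side overlap, while the Lidar swath and point density depend on the same flying height and speed, so changing one parameter affects both sensors.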

(For a more readable version of the table, see here.)

Hybrid processing workflows

Image-based 3D reconstruction using dense image matching, as facilitated by the SURE software by nFrames, is already an industry standard for producing dense 3D point clouds, digital surface models, true orthophotos and 3D meshes. It particularly benefits from the high availability of aerial image data, low acquisition costs, fine resolution of detail and availability of high-resolution multispectral information. If additional Lidar data is available and well co-registered, these results can be improved by the complementary sensor behaviour. Dense surface generation from imagery is particularly strong on detail and edges due to the high resolution defined by the pixel ground resolution. Meanwhile, Lidar technology is strong in its ability to retrieve low-noise samples consistently with homogeneous precision due to the reliable depth measurement of the active laser beam. This is particularly beneficial in the presence of poor texture, such as strong shadows or large white surfaces, where the passive texture matching is limited by the ability of the camera to resolve texture. Here, Lidar data can support the surface generation with additional depth measurements for better precision and completeness. Furthermore, polar measurements are helpful in the case of small yards and very narrow streets, where the laser beam can occasionally reach the ground, while DIM reconstruction is often prevented by stereo occlusions. Lastly, forestry applications additionally benefit from the multiple returns and full-waveform information of Lidar data. By integrating both data sources, this high completeness and reliability can support the high-resolution result from DIM, which delivers high fidelity along edges and other discontinuities, fine surface detail and, in particular, multispectral colour information.

Integration challenge

The main challenge in integrating Lidar and DIM data consistently lies in properly accounting for their large differences in resolution and precision. In aerial applications, the dense image matching point cloud is typically of higher density and lower depth precision than the Lidar data when captured at high altitude, e.g. from fixed-wing aircraft. This is due to the resolution limits imposed by the Lidar beam divergence and pulse repetition rate on the one hand, and the availability of high-resolution large-frame cameras on the other. Furthermore, the local point-cloud precision for dense image matching can vary strongly, as the point precision depends not only on the ground resolution but also on the texture quality. Additionally, the intersection geometry of the stereo models has a strong impact, since the depth error grows as a quadratic function of the depth. This is particularly relevant for oblique imaging, where the depth variation is significant. Consequently, the depth fusion needs to be adapted to the precision of each point from both sensor systems. Within the nFrames SURE software, these photogrammetric precision values for each individual point are estimated and used during the fusion process.
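The two ideas in this paragraph, depth precision that degrades quadratically with depth and a fusion weighted by per-point precision, can be illustrated with a minimal sketch. It uses the textbook normal-case stereo error model and a plain inverse-variance weighted mean. This is an assumption chosen for illustration and is not a description of how SURE implements its fusion. All function names and numbers are hypothetical.

```python
import math


def stereo_depth_sigma(depth_m, base_m, focal_px, match_sigma_px=0.5):
    """Normal-case stereo depth precision: sigma_Z = Z^2 / (B * f) * sigma_match.

    focal_px is the focal length in pixels; match_sigma_px is the assumed
    matching precision in pixels (it worsens on poorly textured surfaces).
    """
    return depth_m ** 2 / (base_m * focal_px) * match_sigma_px


def fuse_depths(measurements):
    """Inverse-variance weighted mean of (depth, sigma) pairs.

    Returns the fused depth and its standard deviation, assuming
    independent, unbiased measurements.
    """
    weights = [1.0 / sigma ** 2 for _, sigma in measurements]
    weight_sum = sum(weights)
    fused = sum(w * depth for w, (depth, _) in zip(weights, measurements))
    return fused / weight_sum, math.sqrt(1.0 / weight_sum)


# Doubling the depth quadruples the expected depth error.
sigma_near = stereo_depth_sigma(1000.0, 200.0, 25000.0)
sigma_far = stereo_depth_sigma(2000.0, 200.0, 25000.0)

# Hypothetical point: a precise Lidar depth and a noisier DIM depth.
fused_depth, fused_sigma = fuse_depths([(10.0, 0.02), (10.3, 0.10)])

print(f"sigma at 1000 m: {sigma_near:.2f} m, at 2000 m: {sigma_far:.2f} m")
print(f"fused depth: {fused_depth:.3f} m, sigma: {fused_sigma:.3f} m")
```

With these weights, the fused depth stays close to the more precise measurement, which is the behaviour a precision-adapted fusion is meant to achieve.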

Hybrid 3D geospatial products

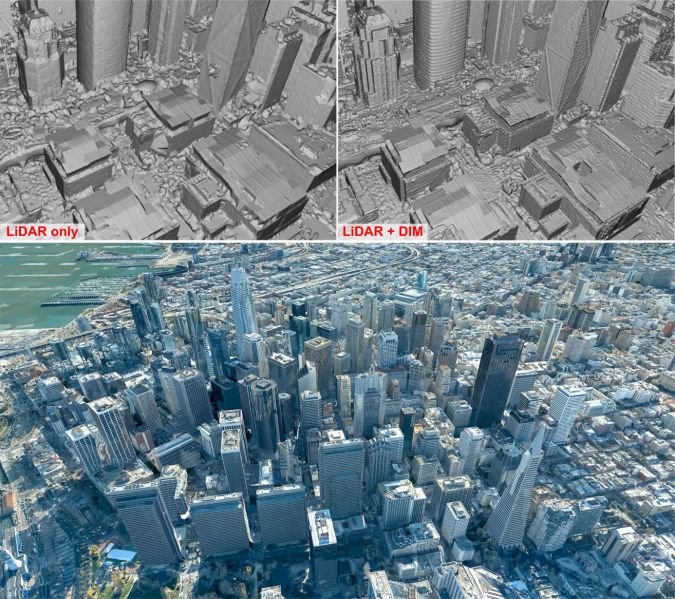

Two of the most prominent differences when comparing Lidar and DIM data as recorded by most hybrid sensor systems are the discrepancy in the achieved point density and the ability to retrieve points in narrow street canyons. This becomes especially obvious when looking at a dataset like San Francisco by Geomni (Figure 1), which was captured with the Leica CityMapper airborne hybrid sensor. The dataset combines tall buildings in the downtown area with a relatively low image overlap of 60% forward and 30% sideways, as well as a ground sampling distance of approximately 5cm in the imagery. When it comes to dense image matching, resolving points in San Francisco’s narrow street canyons can be problematic, since tall buildings act as occluders in the aerial imagery, while image observations on the building tops can also be limited when the image overlap is low, as in this dataset. In areas of the dataset where enough redundancy and a good geometric configuration of image rays are available (for example, on façades of buildings), dense image matching produces very detailed results due to the small ground sampling distance of the dataset and the oblique views of the buildings offered by Leica CityMapper’s five camera heads. As an active polar measurement system, Lidar is capable of measuring points in street canyons where dense image matching lacks the required redundancy. At the same time, however, the spatial detail achieved by Lidar is relatively low (Figure 1, top left). By combining the data from both sensors, it is possible to produce a 3D mesh of San Francisco that features both the detail from dense image matching and the completeness and low noise levels from Lidar (Figure 1, top right). Figure 1, bottom, shows an overview of the 3D mesh produced by a hybrid surface generation approach and supplemented with RGB texture from the multi-view imagery.

Detailed reconstruction

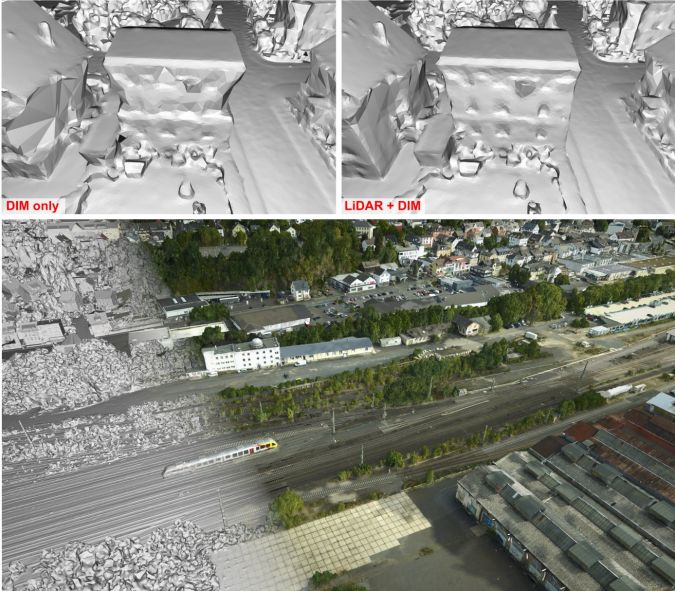

The hybrid dataset collected for Betzdorf, Germany (Figure 2), poses different challenges than San Francisco. It was captured by the airborne IGI LiteMapper-4800, which combines a RIEGL VQ480i with a 100MP nadir-looking camera, and includes imagery featuring a ground sampling distance of approximately 2cm and an overlap of 80% forward and 60% sideways. As visible in Figure 2 (top left), the lack of oblique views can lead to data gaps in dense image matching, especially below overhanging structures of houses and close to the dataset boundaries where image redundancy is reduced. Thanks to the built-in Lidar scanner, however, these gaps can mostly be filled in the integrated results (Figure 2, top right). An overview of the 3D mesh produced by combining Lidar and DIM data is given in Figure 2 (bottom). This also shows the level of detail with which the railway system is reconstructed. Here, Lidar and dense image matching data complement one another to allow reconstruction of both the railway itself and the poles alongside the railway.

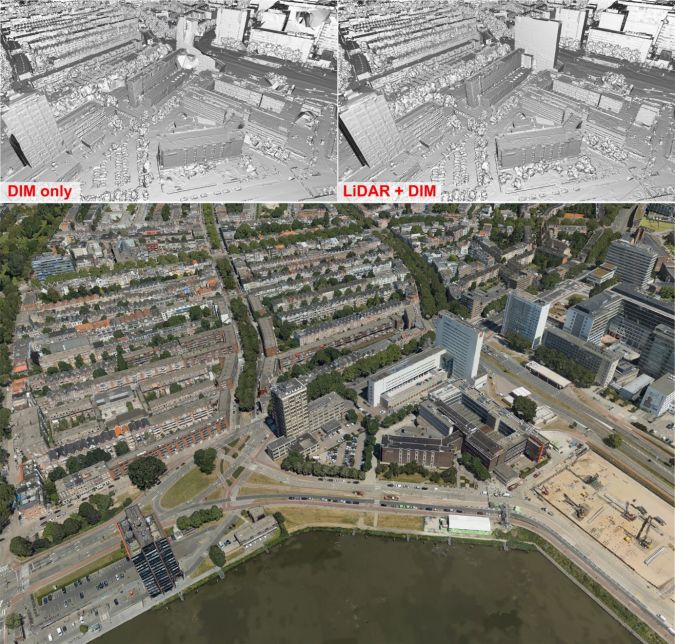

Even though most datasets nowadays are captured at 80% forward overlap because of the significant benefits for completeness and edge sharpness, the overlap can still be limited for some applications, e.g. due to camera repetition rate or flying height. The Rotterdam dataset (Figure 3) is an example of such a dataset. Captured using the Leica CityMapper flown at a relatively low altitude, it achieves an overlap of approximately 60% forward and 40% sideways and a very good ground sampling distance of approximately 3cm. As in San Francisco, such circumstances can result in data gaps during dense image matching, not only in street canyons but also towards the top of tall structures (Figure 3, top left), where the image redundancy is further reduced due to the shorter distance between sensor and reconstructed object. As visible in Figure 3 (top right), integrating Lidar can greatly help to alleviate such issues while retaining the geometric detail of dense image matching throughout the rest of the dataset.
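The loss of redundancy towards the top of tall structures follows from simple geometry and can be sketched as below. The sketch assumes nadir frame imagery and flat terrain. The flying height and building height are hypothetical values, since neither is stated for the Rotterdam dataset, and the function names are illustrative only.

```python
def effective_overlap(ground_overlap, flying_height_m, object_height_m):
    """Overlap at the top of an object, given the overlap planned at ground level.

    The exposure spacing is fixed by the ground-level plan, while the image
    footprint shrinks in proportion to the remaining distance to the object.
    """
    if object_height_m >= flying_height_m:
        raise ValueError("object must be lower than the flying height")
    scale = flying_height_m / (flying_height_m - object_height_m)
    # Clamp at zero: a negative value would mean no overlap at all.
    return max(0.0, 1.0 - (1.0 - ground_overlap) * scale)


def images_per_point(overlap):
    """Approximate number of consecutive images covering a point in one strip."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return 1.0 / (1.0 - overlap)


# Hypothetical values: 1,000 m flying height, 150 m tall building.
roof_overlap = effective_overlap(0.60, 1000.0, 150.0)

print(f"ground: {images_per_point(0.60):.2f} images per point")
print(f"roof:   {images_per_point(roof_overlap):.2f} images per point "
      f"(overlap {roof_overlap:.0%})")
print(f"80% forward overlap at ground: {images_per_point(0.80):.2f} images")
```

Under these assumptions, a nominal 60% forward overlap drops noticeably at roof level, which is consistent with the gaps at the top of tall structures described above and with the preference for 80% forward overlap.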

Conclusion

This article has provided an update on the latest developments in the field of airborne hybrid systems, i.e. airborne mapping systems combining Lidar and camera (or multi-camera) sensors on the same airborne platform. Besides presenting the most recent hybrid solutions available on the market, it discussed the need for integrated processing of the concurrently acquired ranging and imaging data. It will be necessary to adopt a new perspective, one which looks beyond the traditional data processing chains and extends them towards a hybrid data processing concept. Integrated approaches for sensor orientation and surface generation are cornerstones for success in this context, both of which rely on a deep understanding of the different properties of active and passive 3D imaging and of the uncertainty components in their measurements. The first solutions to address these issues are promising, as demonstrated by the 3D results achieved by combining Lidar and DIM data. They have clear potential to take the airborne mapping sector a step forward in terms of product completeness and geometric quality, object detection and processing efficiency.

Acknowledgements

Thanks to the contributing sensor vendors for their technical consulting and data sharing to support this research. A special thanks to George Halley from Geomni/Verisk, Jens Kremer from IGI and Bart Beers from Cyclomedia for providing publishing rights for the examples included in this article.

