Visualizing point clouds in game engines

The use of dense geospatial point clouds in virtual reality

How can the dense point clouds that originate from 3D mapping be turned into useful game engine scenes? This article considers issues including point classification and segmentation, geometric accuracy and point colourization for VR applications.

There are many reasons to use dense, coloured point clouds directly in a virtual world. The point clouds themselves are efficient to produce with 3D mapping methods. The immersive visualization offered by new VR techniques is well suited to geometrically complex datasets, such as indoor point clouds. With the development of computer hardware and of game engines that are compatible with point clouds, point cloud visualization in VR has become increasingly attainable, and therefore relevant for geomatics as well.

Thanks to the continuing advancement of 3D mapping technology, the efficiency of obtaining 3D point clouds has never been higher. Coloured point clouds can be obtained using both survey-grade systems, such as vehicle-mounted mobile laser scanning, and consumer technologies, such as Lidar sensors in mobile devices. Simultaneous localization and mapping (SLAM) systems have made 3D mapping possible indoors in addition to outdoors. Contemporary indoor SLAM systems can operate in geometrically complex environments with high mapping efficiency. Beyond laser scanning systems, the ongoing development of photogrammetric software has not only brought more advanced professional tools to the market, but also introduced more user-friendly open-source software for photogrammetric 3D reconstruction. This has been especially significant in the use of unmanned aerial vehicles (UAVs or ‘drones’) for 3D mapping; after all, the camera remains their most important sensor payload. Nowadays, even consumer-grade mini-UAVs feature gyro-stabilized high-resolution cameras.

In consumer technology, depth cameras and, more recently, Lidar sensors have been integrated into handheld devices, allowing near-real-time 3D reconstruction. They are also used in 3D scanners and autonomous robotic devices. Thanks to the combination of these developments, most of the environments encountered in everyday life can now be digitized in three dimensions.

From mapping to immersive experiences

Consequently, the challenge in the use of 3D sensing technologies is shifting from data acquisition to data utilization. As point clouds remain the lowest common denominator in 3D sensing, they are the most attractive type of data to work with: solutions that can use point clouds are compatible with a wide variety of sensing technologies. The simplest application is the visualization of point clouds. On a desktop computer with a conventional monitor, this can be done with a multitude of commercial and open-source software solutions. Likewise, browser-based visualization of point clouds is enabled by a number of openly available JavaScript libraries and online services.

Over the past decade, virtual reality (VR) visualization using head-mounted displays (HMDs) has matured into a consumer technology. Devices are available from multiple manufacturers, but the business ecosystem for releasing applications is converging around a few major players. Similarly, development tools and open standards that support a multitude of VR HMDs, such as OpenXR, have emerged, simplifying application development. For building interactive VR applications and experiences beyond mere data visualization, commercial game engines are the de facto standard environment. Here, the VR community benefits from the huge pool of developers who can apply their experience of, and enthusiasm for, game development with commercial game engines. For a game developer, moving into VR is relatively easy and well documented.

Virtual reality and point clouds

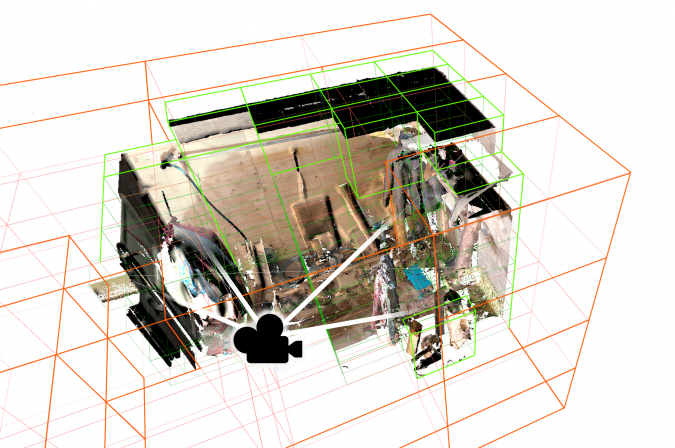

As most VR apps for HMDs are built using game engines, it is important that those game engines support point clouds. Fortunately, point clouds have become directly available as data types in many game engines, via built-in features or plugins. However, point cloud visualization in VR is further complicated by strict rendering performance requirements, especially the high frame rate required. In an interactive visualization viewed on an ordinary monitor, low frame rates are at most annoying. In VR, reduced rendering speed causes a mismatch between the rendered image and the human sensory system. This results in discomfort for the user and, in extreme cases, can lead to physical symptoms such as nausea.
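The frame-rate requirement can be expressed as a time budget per frame: at a refresh rate of f Hz, each frame has to be produced within 1000 / f milliseconds. The sketch below only performs that arithmetic; the rates in the loop are illustrative assumptions rather than figures from this article, and in VR both eye views have to be rendered within the same interval.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to produce one frame (both eye views in VR), in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


# Illustrative rates only: a common desktop monitor rate vs. assumed HMD rates.
for hz in (60, 90, 120):
    print(f"{hz:>3} Hz -> {frame_budget_ms(hz):.2f} ms per frame")
```

At 90 Hz the budget is about 11.1 ms, and everything in the rendering loop, including traversal of the point cloud's spatial index, has to fit into it.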

The VR visualization of point clouds requires highly efficient systems for spatial indexing, level-of-detail handling and excluding points that are not visible from rendering (culling). These systems allow the engine to determine which parts of the point cloud are actually seen by the virtual camera and to render only those, at a sufficient level of detail. Further, rendering has to be organized so that fewer points are drawn from more distant parts of the cloud, with minimal processing. The total number of points to be rendered can be kept within limits by combining spatial indexing with culling and with multiple levels of detail (selected by distance), and by adjusting the size of the rasterized points.
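Combining culling, distance-dependent level of detail and a fixed point budget can be sketched as a priority-driven traversal of an octree. This is one common approach, shown as a minimal sketch rather than the method of any particular engine or plugin: the `Node` layout (each node storing its own subsample), the bounding-sphere test against frustum planes, the `apparent_size` heuristic and the `min_size` threshold are all assumptions made for illustration.

```python
import heapq
import math
from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]
# A frustum plane as (unit normal n, offset d); a point p is inside if n.p + d >= 0.
Plane = tuple[Vec3, float]


@dataclass
class Node:
    """One cell of a hypothetical octree LOD hierarchy; each node stores a subsample."""
    center: Vec3
    radius: float            # bounding-sphere radius of the cell
    point_count: int         # number of points stored at this node
    children: list["Node"] = field(default_factory=list)


def sphere_visible(node: Node, planes: list[Plane]) -> bool:
    """Conservative frustum culling: reject only spheres fully outside some plane."""
    cx, cy, cz = node.center
    for (nx, ny, nz), d in planes:
        if nx * cx + ny * cy + nz * cz + d < -node.radius:
            return False
    return True


def apparent_size(node: Node, camera: Vec3) -> float:
    """Rough projected size: bounding radius over distance to the sphere surface."""
    dist = math.dist(node.center, camera) - node.radius
    return node.radius / max(dist, 1e-6)


def select_nodes(root: Node, camera: Vec3, planes: list[Plane],
                 point_budget: int, min_size: float = 0.05) -> list[Node]:
    """Visit visible nodes in order of apparent size until the point budget is used."""
    selected: list[Node] = []
    used = 0
    tie = 0  # tie-breaker so heapq never has to compare Node objects
    heap = [(-apparent_size(root, camera), tie, root)]
    while heap:
        neg_size, _, node = heapq.heappop(heap)
        if not sphere_visible(node, planes):
            continue                      # culled: neither node nor its children drawn
        if used + node.point_count > point_budget:
            break                         # budget spent; less important nodes dropped
        selected.append(node)
        used += node.point_count
        if -neg_size > min_size:          # still large on screen: refine further
            for child in node.children:
                tie += 1
                heapq.heappush(heap, (-apparent_size(child, camera), tie, child))
    return selected
```

Because nodes are taken in order of apparent size, it is the distant, small-on-screen parts of the cloud that lose detail first when the budget runs out.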

As VR visualization inherently provides a first-person view, see-through effects (gaps between points through which surfaces behind them become visible) and issues arising from variations in point density are common, especially with unstructured indoor point clouds. Therefore, dynamic point size adjustment has to be implemented. In a game engine, this is connected to the method used to actually draw the points. Most commonly, the points are either drawn directly as sets of pixels or represented by geometric objects (such as squares or circles) that are rendered via the engine’s conventional triangle rendering pipeline.
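One way to size points dynamically is to project the local point spacing onto the screen with a pinhole camera model: a gap of spacing s at depth z covers about s·f/z pixels, where f is the focal length in pixels. The sketch below assumes that a per-point or per-node spacing estimate is available and uses arbitrary clamp limits; it also illustrates the second drawing option by expanding a point into a camera-facing square. In an engine this work would typically run in a shader rather than in Python, and the function names are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def focal_length_px(viewport_height_px: int, fov_y_deg: float) -> float:
    """Pinhole focal length in pixels for a given vertical field of view."""
    return (viewport_height_px / 2) / math.tan(math.radians(fov_y_deg) / 2)


def point_size_px(spacing_m: float, depth_m: float, focal_px: float,
                  min_px: float = 1.0, max_px: float = 32.0) -> float:
    """Screen size at which neighbouring points (spacing_m apart) just touch, clamped."""
    size = spacing_m * focal_px / max(depth_m, 1e-6)
    return min(max(size, min_px), max_px)


def billboard_quad(center: Vec3, right: Vec3, up: Vec3,
                   size_px: float, depth_m: float, focal_px: float) -> list[Vec3]:
    """Corners of a camera-facing square that projects to roughly size_px pixels.

    `right` and `up` are the camera's unit axes in world space.
    """
    half = 0.5 * size_px * depth_m / focal_px  # world-space half-width of the square
    corners = []
    for sx, sy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
        corners.append(tuple(c + half * (sx * r + sy * u)
                             for c, r, u in zip(center, right, up)))
    return corners


f = focal_length_px(1000, 90.0)     # about 500 px for a 90 degree vertical FOV
size = point_size_px(0.02, 2.0, f)  # about 5 px: 2 cm spacing seen from 2 m
quad = billboard_quad((0.0, 0.0, 2.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), size, 2.0, f)
```

Clamping matters in both directions: very close points would otherwise cover large parts of the view, while distant points should not shrink below one pixel.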

Dynamic assets enable rich interaction

Rich interaction between the virtual environment and the user is often a key driver of interest in game engine visualization. Conventional game engine scenes are built from a set of mesh models, cameras, lights, actors, etc. Dynamic, scripted interactions turn such a scene from a static rendering into an interactive environment. To minimize the use of computational resources, virtual scenes are typically assembled so that only the objects users can interact with respond to simulated collisions, gravity and more sophisticated lighting models. Simplified geometries, such as primitive shapes and convex hulls, are used to simulate collisions and detect overlaps.
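A minimal sketch of the simplest such proxy is an axis-aligned bounding box (AABB) computed from the points of one object, together with the standard interval-overlap test. The AABB is swapped in here as the simplest primitive shape; convex hulls fit more tightly but cost more to build and test. The class and method names are hypothetical and do not correspond to any engine's API.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class AABB:
    """Axis-aligned bounding box used as a cheap collision proxy for a point set."""
    lo: Vec3
    hi: Vec3

    @staticmethod
    def from_points(points: list[Vec3]) -> "AABB":
        if not points:
            raise ValueError("cannot bound an empty point set")
        xs, ys, zs = zip(*points)
        return AABB((min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs)))

    def overlaps(self, other: "AABB") -> bool:
        """Two boxes overlap only if their intervals overlap on all three axes."""
        return all(a_lo <= b_hi and b_lo <= a_hi
                   for a_lo, a_hi, b_lo, b_hi in zip(self.lo, self.hi, other.lo, other.hi))
```

The full point set would still be used for rendering; only the box would be handed to collision and overlap checks.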

If the use of point clouds is to go beyond simple visualization, the point cloud also has to be made compatible with the features commonly used in game engine applications, such as real-time lighting, simulated collisions and interactive objects. Of these, lighting is mostly a rendering issue, handled by the visualization system. Realizing separate objects, however, also places requirements on the point clouds themselves.

Turning dense point clouds into useful game engine scenes therefore requires the point clouds to be separated into interactable objects and coupled with their simplified versions to allow dynamic simulations. At the same time, the indexing and rendering solutions that are used have to be compatible with objects that move within the scene.
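As a rough illustration of separating a cloud into objects, the sketch below uses Euclidean clustering: points within a distance threshold of each other are connected, and each connected component becomes one candidate object. This is a deliberately simple, purely geometric stand-in for the refined, object-wise segmentation that interactive scenes need; the function name and the `radius` parameter are assumptions, and a suitable radius depends on point density.

```python
import math
from collections import defaultdict, deque

Vec3 = tuple[float, float, float]


def euclidean_clusters(points: list[Vec3], radius: float) -> list[int]:
    """Label points so that points within `radius` of each other share a label.

    Points are hashed into cubic cells with edge length `radius`, so every
    neighbour of a point lies in its own cell or one of the 26 adjacent cells.
    """
    def cell(p: Vec3) -> tuple[int, ...]:
        return tuple(math.floor(c / radius) for c in p)

    grid: dict[tuple[int, ...], list[int]] = defaultdict(list)
    for i, p in enumerate(points):
        grid[cell(p)].append(i)

    labels = [-1] * len(points)
    next_label = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue                       # already part of an earlier cluster
        labels[seed] = next_label
        queue = deque([seed])
        while queue:                       # breadth-first flood fill
            i = queue.popleft()
            cx, cy, cz = cell(points[i])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    for dz in (-1, 0, 1):
                        for j in grid.get((cx + dx, cy + dy, cz + dz), []):
                            if labels[j] == -1 and math.dist(points[i], points[j]) <= radius:
                                labels[j] = next_label
                                queue.append(j)
        next_label += 1
    return labels
```

Objects that touch, such as a chair standing on the floor, end up in the same cluster, which is one reason why distance-based clustering alone is rarely enough for indoor scans.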

Conclusion

In research literature and software development, a number of solutions have been presented for turning the dense point clouds that originate from 3D mapping into useful game engine scenes. Many game-like properties, such as interactable objects and dynamic lighting, have already been achieved with point clouds. However, a number of open research questions remain. For point clouds to be applied in game engines, it is not enough to classify the points into simple object types such as buildings or vegetation. Producing interactive game engine scenes requires more refined, object-wise segmentation and semantic understanding. More research and more sophisticated solutions are still needed for the classification and segmentation of objects in point clouds.

In existing research, the assessment of the quality of point clouds obtained using different 3D mapping techniques has mostly focused on their geometric accuracy. However, VR applications also require sufficiently good point colourization to be achieved as part of 3D sensing. This places emphasis on the radiometric quality of point clouds, as it plays a significant part in their visualization.

From a computational perspective, textured mesh models are currently the most effective way of representing geometry in game engine visualization. However, the use of point clouds holds many potential benefits, such as simplified data integration from different mapping systems and improved efficiency in digitizing real-life environments. Further, VR offers a natural way of exploring geometrically complex datasets with full six degrees of freedom, navigating them immersively and potentially even interacting with the points. Therefore, experimentation with the VR visualization of point clouds remains topical for the geomatics community.

Further reading

Virtanen, J. P., Daniel, S., Turppa, T., Zhu, L., Julin, A., Hyyppä, H., & Hyyppä, J. (2020). Interactive dense point clouds in a game engine. ISPRS Journal of Photogrammetry and Remote Sensing, 163, 375-389. https://doi.org/10.1016/j.isprsjprs.2020.03.007

Virtanen, J. P., Julin, A., Handolin, H., Rantanen, T., Maksimainen, M., Hyyppä, J., & Hyyppä, H. (2020). Interactive Geoinformation in Virtual Reality – Observations and Future Challenges. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 44(4/W1). https://doi.org/10.5194/isprs-archives-XLIV-4-W1-2020-159-2020

Julin, A., Kurkela, M., Rantanen, T., Virtanen, J. P., Maksimainen, M., Kukko, A., ... & Hyyppä, H. (2020). Evaluating the Quality of TLS Point Cloud Colorization. Remote Sensing, 12(17), 2748. https://doi.org/10.3390/rs12172748
