The era of smart manufacturing: why you need a good data strategy

As we enter the era of smart manufacturing, the reliability of data is growing in significance and data standardization is becoming increasingly important. According to JP Kelly, associate director and head of manufacturing at Murphy Geospatial, businesses need a good data strategy to succeed.

We are entering the era of smart manufacturing, where we can automate and simulate updates to processes and facilities virtually. The rapid adoption of automation, driven by a significant exodus of workers from the manufacturing sector over the past decade, will prompt substantial efforts to establish smart factories. Digital twins, virtual replicas of real-world systems built from geospatial data, are used to enhance manufacturing systems and products.

Artificial intelligence (AI) tools are now being integrated into digital twins, making these data-hungry systems even hungrier, as they rely heavily on the large volumes of ‘big data’ coming from sensors and Internet of Things (IoT) devices that mirror physical objects or systems in real time. The volume of data required for digital twins is growing exponentially. At the same time, technology’s capacity to capture data is expanding rapidly. As AI increasingly enhances the capabilities of digital twins, the reliability of data is growing in significance and data standardization is becoming more important.

The modern vision of a manufacturing ecosystem – where the IoT and sensors offer real-time insights and intuitive digital twins bridge the gap between physical and digital realms – promises enhanced efficiency, waste reduction, error elimination and prolonged asset longevity. However, achieving this future way of working means manufacturers need a clear data strategy that outlines stringent measures for data standardization and governance. As data volumes grow, it becomes harder to keep track of where information is stored and what it contains. Big data is indispensable for insights, but problems arise when organizations lack an accurate understanding of the data they hold. Escalating data volumes also make that data harder to control and safeguard.

Is it good or bad data? Separating the wheat from the chaff

In manufacturing, the implications of being able to identify good and bad data cannot be overstated. Bad data, characterized by inaccuracies, incompleteness, inaccessibility, inconsistency or untimeliness, poses a significant danger to the entire production process. Similarly, faulty information hampers the ability to gain a comprehensive understanding of a manufacturing facility, hindering strategic planning and timely responses to challenges.
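To make those characteristics concrete, the following is a minimal sketch of how a single sensor reading might be screened against four of them. Everything in it is an illustrative assumption rather than a method described in this article: the `Reading` structure, the `quality_issues` function, the expected unit, the plausible value range and the five-minute freshness window are all hypothetical. An out-of-range value is used only as a rough proxy for inaccuracy, and inaccessibility is not covered because it concerns how data is stored rather than the reading itself.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple


@dataclass
class Reading:
    """A hypothetical sensor reading; field names are illustrative only."""
    sensor_id: str
    value: Optional[float]
    unit: str
    timestamp: datetime


def quality_issues(
    reading: Reading,
    now: datetime,
    expected_unit: str = "C",                         # assumed unit convention
    valid_range: Tuple[float, float] = (-40.0, 150.0),  # assumed plausible range
    max_age: timedelta = timedelta(minutes=5),        # assumed freshness window
) -> List[str]:
    """Return the list of data-quality problems found in one reading."""
    issues: List[str] = []
    if reading.value is None:
        # A missing value means the record is incomplete.
        issues.append("incomplete")
    elif not (valid_range[0] <= reading.value <= valid_range[1]):
        # An implausible value is treated as a proxy for inaccuracy.
        issues.append("inaccurate")
    if reading.unit != expected_unit:
        # A unit that differs from the agreed standard is inconsistent.
        issues.append("inconsistent")
    if now - reading.timestamp > max_age:
        # A reading older than the freshness window is untimely.
        issues.append("untimely")
    return issues


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
good = Reading("s1", 21.5, "C", now - timedelta(minutes=1))
bad = Reading("s2", None, "F", now - timedelta(hours=1))
print(quality_issues(good, now))
print(quality_issues(bad, now))
```

In practice the thresholds and unit conventions would come from the organization's own data standard, which is where a documented data strategy earns its keep.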

Many players in the manufacturing sector rely on complex supply chains comprising a diverse selection of sites and stakeholders. Disparate stakeholders and the need for quick and concurrent decision-making can exacerbate the impact of poor data. Trusting inaccurate or incomplete information can lead to misunderstandings about project status, which can have a knock-on effect and cause mistakes with widespread consequences.

Good data, however, is fundamental to the success of integrating AI tools and the use of digital twins. Good data enables informed decision-making, reduces inaccuracies and ensures the completeness and consistency of data throughout an entire asset lifecycle. Without good-quality data, you cannot create an intuitive digital twin, and without that you cannot create a truly smart factory.

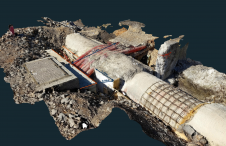

So how do you know the difference? It’s all about how the data was collected, how it was labelled, and how it is managed. It starts with an accurate digital record of an asset, via forensic data capture and the process of Scan-to-BIM (building information modelling). Then, implementing a geospatial management plan allows the strategic handling and analysis of spatial data using technologies like GIS, AR and VR to support informed decision-making, planning and resource management of the asset.

AI and autonomous workflows

Autonomous workflows have been proven to offer a strong competitive advantage, time savings and improved business processes. But with AI making decisions based on the data it has been fed, it’s easy to see why the accuracy of data included in the many layers of the digital systems matters so much. Inaccurate data can result in AI recommending unnecessary manufacturing processes. This not only wastes valuable time, but also incurs additional costs. While the immediate benefits of having accurate data within the architecture of a digital twin might not be apparent in small-scale projects, the true value unfolds over time.
