Digital twins: a comprehensive solution or hopeful vision?

Reflecting on the challenges in the geospatial domain

The concept of the digital twin has been gaining significant momentum in the geospatial domain since 2016. Although there is some consensus on the basic features of a digital twin, some critical reflection is still necessary to make the concept tangible. Only then can the digital twin be implemented as a technical solution that scales to real-world problems rather than being an abstract vision for partial solutions. In this article, the authors reflect on the practical digital twin-related challenges in the geospatial domain.

The concept of a digital twin (DT) originated in the manufacturing industry, where a DT is used as an exact virtual copy of a product with the aim of controlling and improving it. To do so, a DT should not only be a virtual copy of the past, current and future states of its physical counterpart; it should also contain a self-evaluation functionality based on continuous updates and provide an interaction mechanism to evaluate what-if scenarios. In the geospatial domain, the first articles on DTs were published in 2012. The concept has been gaining significant momentum since then, and especially since 2016: 80% of all existing papers on DTs have been published since that year (Ketzler et al., 2020).

Defining a digital twin

Despite the increased use of the term DT, there is no common definition in use and, consequently, the term is used in inconsistent ways: as a digital replica of an asset in a BIM context, as a 3D city model, as a digital representation of the physical environment including its dynamic processes, as geospatial information infrastructure, etc. Even though a single definition of a DT in the geospatial domain is lacking, there is consensus that it should be based on 3D city models, containing objects with geometric and semantic information; it should contain real-time sensor data; and it should integrate a variety of analyses and simulations to be able to make the best design, planning and intervention decisions. To support those decisions, all information should be presented to users (citizens, decision-makers, experts) in a user-friendly visualization of the DT in a one-stop-shop dashboard.

Beyond these consensus features, the DT concept still needs critical reflection to make it tangible. Only then can the DT be implemented as a technical solution that scales to real-world problems, rather than remaining an abstract vision that justifies focusing on partial solutions only.

Exact mirror concept

The exact mirror concept of a DT originates from the manufacturing industry, where it defines exact digital replicas of objects in a closed system, like a single product (e.g. a car) or a production line. But this exact mirror concept does not work for the complex reality in the geospatial context. Like all other kinds of models, geospatial models are necessarily an abstraction of reality to provide insight into a specific phenomenon (e.g. geology, erosion, energy consumption, noise pollution, etc.). This abstraction is needed, since it is impossible to geospatially model our world 1:1 in content, scale, detail and time, and it is necessary to limit the computation and load time of spatial analysis. Therefore, any geospatial representation of reality is a model at a specific level of detail at a specific time. This is already common practice in 3D city modelling, which has widely adopted the notion of level of detail (LoD).
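To make the LoD notion concrete, the following minimal Python sketch stores several representations of one building and hands each application the coarsest one that is detailed enough. The three-level scheme is a simplification loosely modelled on CityGML LoD0 to LoD2, and the names `LoD`, `Building` and `for_application` are hypothetical; plain strings stand in for real geometries.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class LoD(IntEnum):
    """Simplified levels of detail, loosely modelled on CityGML LoD0 to LoD2."""
    LOD0 = 0  # 2D footprint only
    LOD1 = 1  # block model: footprint extruded to a single height
    LOD2 = 2  # block model with simplified roof shapes


@dataclass
class Building:
    building_id: str
    # Each available LoD maps to a geometry; plain strings stand in for real geometry here.
    representations: dict[LoD, str] = field(default_factory=dict)

    def for_application(self, required: LoD) -> tuple[LoD, str]:
        """Return the coarsest stored representation that is at least as detailed as required."""
        candidates = [lod for lod in self.representations if lod >= required]
        if not candidates:
            raise ValueError(f"{self.building_id}: nothing at {required.name} or finer")
        chosen = min(candidates)
        return chosen, self.representations[chosen]


# Usage: one application may only need LoD1 blocks, while e.g. a solar study needs roofs (LoD2).
house = Building("b1", {LoD.LOD0: "footprint", LoD.LOD2: "roof model"})
print(house.for_application(LoD.LOD1))
```

Requesting LoD1 from a building that only stores LoD0 and LoD2 falls back to the LoD2 model, since the footprint alone cannot satisfy the request. Choosing the coarsest sufficient level reflects the point above: extra detail adds computation and load time without necessarily adding insight.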

Various examples of DTs embrace this concept of abstraction to model relevant objects in 3D in a standardized manner over large areas. One such example, which incorporates the LoD notion, is the 3D BAG in the Netherlands, which adds 3D building models to the national Key Register of Addresses and Buildings (Basisregistratie Adressen en Gebouwen, BAG). This viewing and downloading service contains 3D models at multiple LoDs for all 10 million buildings in the country. It is automatically generated and regularly updated by the 3D Geoinformation Research Group at TU Delft, and the data is used in many urban applications (see Figure 1). Another good example is the landscape model of Switzerland and Liechtenstein, a national digital twin that represents the landscape in 3D at a defined level of abstraction and with a high degree of accuracy. It maintains the natural and man-made objects that shape the landscape, such as roads, buildings, bridges, cable cars, forests, individual trees and water bodies, for various purposes (see Figure 2). Both examples are available as open data.

There are also other reasons why the exact mirror concept is not adequate for geospatial representations within DTs. The concept implies a single virtual representation of reality. However, every application requires its own virtual view, which is a selection of reality. The one-size-fits-all property that is utopically ascribed to DTs therefore falls short. Instead, a synchronized ‘litter of twins’ is needed. For example, in building information modelling (BIM), a simple house easily consists of more than a thousand structural components, all modelled as volumes, whereas in a geographic information system (GIS) it is one object, modelled with measurable/observable surfaces. Each of these representations serves different applications best, and it has proven impossible to adequately serve both BIM and GIS applications with exactly the same model.
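The ‘litter of twins’ idea can be sketched as views derived from shared base data rather than one universal model. The Python below is a hypothetical illustration (the classes, fields and the version-number synchronization scheme are assumptions, not an existing standard): it collapses many BIM-style volumetric components into a single GIS-style building object and records which version of the base data it was derived from, so that a stale view can be detected and regenerated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BimComponent:
    """A structural element in a BIM-style model, modelled as a volume."""
    component_id: str
    kind: str          # e.g. "wall", "slab", "column"
    volume_m3: float
    min_z: float
    max_z: float


@dataclass(frozen=True)
class GisBuilding:
    """One GIS object derived from all components of a building."""
    building_id: str
    height_m: float
    material_volume_m3: float
    source_version: int  # version of the shared base data this view was derived from


def derive_gis_view(building_id: str, components: list[BimComponent],
                    source_version: int) -> GisBuilding:
    """Collapse many BIM components into one GIS object (one twin in the litter)."""
    if not components:
        raise ValueError("a building needs at least one component")
    height = max(c.max_z for c in components) - min(c.min_z for c in components)
    volume = sum(c.volume_m3 for c in components)
    return GisBuilding(building_id, height, volume, source_version)


def is_in_sync(view: GisBuilding, current_source_version: int) -> bool:
    """A derived view is stale once the shared base data has moved on."""
    return view.source_version == current_source_version


comps = [
    BimComponent("w1", "wall", volume_m3=12.0, min_z=0.0, max_z=6.0),
    BimComponent("s1", "slab", volume_m3=8.0, min_z=5.8, max_z=6.2),
]
view = derive_gis_view("b1", comps, source_version=3)
print(view)
print(is_in_sync(view, current_source_version=4))  # False: the base data has changed
```

Note that `material_volume_m3` is the summed volume of the components, which is not the volume enclosed by the building envelope; differences of this kind are why one model is hard to share between BIM and GIS applications.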

Real-time and dynamic information

Real-time and dynamic information, as well as continuous updates, are other recognized key properties of a DT. For geospatial representations of reality, however, acquiring data every few weeks or even months is already considered high-frequency. Is it realistic to aim for an exact mirror of reality (beyond project sites), which requires continuous synchronization with the physical twin? Or is it more realistic to strive for a less dynamic base of modelled objects that captures only significant changes, with highly dynamic information reserved for sensor data (noise, air quality, etc.)? Furthermore, a cost-effective approach is needed that captures and models the changes relevant to the scope of the model, rather than capturing all real-time information. Another temporal issue is how to store versioned data of the changing reality, so that it can be used to monitor trends while also making it possible to return to a past state on which certain decisions were based.
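The two temporal requirements above, recording only significant changes and returning to a past state, can be combined in a small versioned store. This Python sketch is a minimal illustration under stated assumptions: the `VersionedAttribute` class is hypothetical, it tracks a single numeric attribute (such as a building height), and the significance threshold is an application-specific choice rather than a recommended value.

```python
import bisect
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class VersionedAttribute:
    """Keeps every significant past value so that earlier states can be reconstructed."""
    threshold: float  # assumed, application-specific minimum change worth recording
    _dates: list[date] = field(default_factory=list, init=False)
    _values: list[float] = field(default_factory=list, init=False)

    def observe(self, when: date, value: float) -> bool:
        """Store a new version only if it differs significantly from the latest stored one."""
        if self._dates and when <= self._dates[-1]:
            raise ValueError("observations must be added in chronological order")
        if self._values and abs(value - self._values[-1]) < self.threshold:
            return False  # insignificant change: the less dynamic base stays as it is
        self._dates.append(when)
        self._values.append(value)
        return True

    def as_of(self, when: date) -> Optional[float]:
        """Return the value valid on a given date, e.g. the date a decision was made."""
        i = bisect.bisect_right(self._dates, when)
        return self._values[i - 1] if i > 0 else None


# Usage: the height of one building, re-measured at irregular intervals.
height = VersionedAttribute(threshold=0.5)
height.observe(date(2019, 1, 1), 10.0)   # stored
height.observe(date(2020, 1, 1), 10.2)   # ignored: below the 0.5 m threshold
height.observe(date(2021, 6, 1), 16.0)   # stored: e.g. a storey was added
print(height.as_of(date(2020, 12, 31)))  # prints 10.0, the state a 2020 decision was based on
```

A production system would version whole objects and would typically distinguish valid time (when a change happened in reality) from transaction time (when it was recorded); the sketch keeps only the former.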

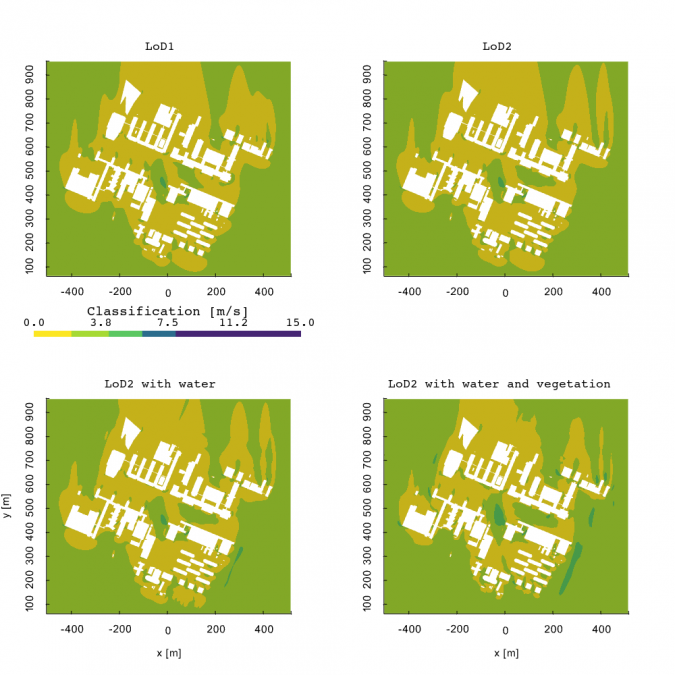

Simulations versus predictions

Simulations are also widely considered to be part of the requirements of DTs, feeding the one-stop-shop dashboard with the outcomes of what-if scenarios for several indicators, e.g. energy, noise, wind (Figure 3) and mobility. To make this possible, simulations need to run on geodata from the same source. In practice, however, only a few types of analysis can be performed directly on geospatial data as available. Most simulations are instead based on mathematical models, which usually need specific data structures, objects modelled according to specific guidelines, high-end hardware and specific software environments. One potential solution is to run global, simplified analyses in an integrated environment and more detailed simulations in specialized environments. The challenge is then how to implement workflows from the same base data to different simulation environments and to feed the simulation results back to one dashboard.
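One way to picture the workflow described above is a set of adapters, each converting the same base data into the structure a specific simulation environment expects and feeding its results back into one dashboard table. In this Python sketch, `BaseBuilding`, `SimulationStep` and `run_all` are hypothetical names, and the two analyses are deliberately trivial placeholders, not real energy or wind models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class BaseBuilding:
    """One object from the shared base data (an assumed, minimal schema)."""
    building_id: str
    footprint_m2: float
    height_m: float


@dataclass(frozen=True)
class SimulationStep:
    """A converter to an environment-specific structure plus the analysis run on it."""
    indicator: str
    convert: Callable[[list[BaseBuilding]], dict[str, float]]
    simulate: Callable[[dict[str, float]], dict[str, float]]


def run_all(base: list[BaseBuilding],
            steps: list[SimulationStep]) -> dict[str, dict[str, float]]:
    """Send the same base data to every environment and collect results per building."""
    dashboard: dict[str, dict[str, float]] = {b.building_id: {} for b in base}
    for step in steps:
        prepared = step.convert(base)  # e.g. simplify or re-mesh geometry for one tool
        for building_id, value in step.simulate(prepared).items():
            dashboard[building_id][step.indicator] = value
    return dashboard


# Placeholder analyses only: these formulas stand in for real energy or wind models.
energy = SimulationStep(
    indicator="energy_proxy",
    convert=lambda bs: {b.building_id: b.footprint_m2 * b.height_m for b in bs},  # volumes
    simulate=lambda volumes: {bid: v / 20.0 for bid, v in volumes.items()},
)
wind = SimulationStep(
    indicator="wind_exposure_proxy",
    convert=lambda bs: {b.building_id: float(round(b.height_m)) for b in bs},  # coarser heights
    simulate=lambda hs: {bid: h / max(hs.values()) for bid, h in hs.items()},
)

base = [BaseBuilding("a", 100.0, 10.0), BaseBuilding("b", 50.0, 20.0)]
print(run_all(base, [energy, wind]))
```

Keeping the conversion step separate from the analysis makes explicit where each environment's data requirements diverge from the shared base, which is where the integration challenge sits.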

Another issue with simulating the complex environment is its inherent uncertainty. Simulations as part of DTs are often mistaken for predictions of reality. Such an open and complex system involves many (unknown) variables that cannot be accounted for; current wind comfort simulations, for example, are limited to simplified geometry and often do not include vegetation. At best, therefore, simulations provide insights into specific phenomena, e.g. noise levels produced by a specific source or wind flows based on dominant wind directions, and as such they provide recommendations optimized for only a specific criterion; they cannot predict the exact values of all criteria at all points in time. How can the uncertainty (which is not the same as accuracy) of simulations be presented in the one-stop-shop dashboard, and how can it be communicated to the non-expert users of DTs who will make decisions based on the outcomes?
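One common way to communicate uncertainty is to run a simulation many times with uncertain inputs and show a range instead of a single number. The Python sketch below does this with the standard free-field formula for a point source with spherical spreading, Lp = Lw - 20 log10(r) - 11 dB, which ignores ground effects, reflections and weather. The input ranges (a source power known only to within 3 dB either way, and 0 to 5 dB of unmodelled screening, e.g. by vegetation) are assumptions chosen for illustration.

```python
import math
import random
import statistics


def point_source_level(sound_power_db: float, distance_m: float,
                       extra_attenuation_db: float) -> float:
    """Free-field spherical spreading, Lp = Lw - 20*log10(r) - 11, minus other losses."""
    return sound_power_db - 20.0 * math.log10(distance_m) - 11.0 - extra_attenuation_db


def noise_ensemble(distance_m: float, runs: int = 1000, seed: int = 42) -> dict[str, float]:
    """Sample uncertain inputs and summarise the spread instead of reporting one value."""
    rng = random.Random(seed)  # seeded so the dashboard shows reproducible numbers
    levels = []
    for _ in range(runs):
        lw = rng.uniform(97.0, 103.0)   # assumed: source power of 100 dB, +/- 3 dB
        extra = rng.uniform(0.0, 5.0)   # assumed: unmodelled screening, e.g. vegetation
        levels.append(point_source_level(lw, distance_m, extra))
    cuts = statistics.quantiles(levels, n=20)  # 19 cut points: 5 %, 10 %, ..., 95 %
    return {"p05": cuts[0], "median": statistics.median(levels), "p95": cuts[-1]}


print(noise_ensemble(100.0))
```

With these assumed ranges, every sampled level at 100 m lies between 41 and 52 dB, so a dashboard could display the 5th to 95th percentile band. This band describes sensitivity to unknown inputs (uncertainty), not how well the model matches measurements (accuracy).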

Reality-based versus realistic-looking visualizations

DTs are supposed to offer user-friendly visualizations to reach a wider public beyond geospatial professionals. Non-expert users may expect that the more realistic a 3D city model looks, the better. But realistic-looking models require more detail and are therefore more expensive to acquire. Often only limited height-related data is openly available, so basic block models are the best 3D models that can be generated for a large portion of the Earth. Such models already offer great benefits for spatial analysis in 3D as opposed to traditional 2D approaches, and are therefore good DT candidates (see Figure 4).
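A block model of the kind described above needs only a footprint and a height. This Python sketch extrudes a footprint polygon into an LoD1-style block and derives quantities that a 2D map alone does not provide, such as facade area and volume. The function names are hypothetical, and `height_from_storeys` with a default storey height of 3 m is an assumed fallback for places where only a storey count is openly available.

```python
import math

Point = tuple[float, float]


def shoelace_area(ring: list[Point]) -> float:
    """Planar area of a simple polygon whose (x, y) vertices are given in metres."""
    n = len(ring)
    twice_area = sum(
        ring[i][0] * ring[(i + 1) % n][1] - ring[(i + 1) % n][0] * ring[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2.0


def perimeter(ring: list[Point]) -> float:
    """Length of the closed footprint outline."""
    n = len(ring)
    return sum(math.dist(ring[i], ring[(i + 1) % n]) for i in range(n))


def extrude_block(ring: list[Point], height_m: float) -> dict[str, float]:
    """LoD1-style block: the footprint extruded to one height, with a flat roof."""
    area = shoelace_area(ring)
    return {
        "footprint_m2": area,
        "roof_m2": area,                          # flat roof in a block model
        "facade_m2": perimeter(ring) * height_m,  # vertical walls, openings ignored
        "volume_m3": area * height_m,
    }


def height_from_storeys(storeys: int, storey_height_m: float = 3.0) -> float:
    """Assumed fallback when only a storey count is openly available."""
    return storeys * storey_height_m


footprint = [(0.0, 0.0), (20.0, 0.0), (20.0, 10.0), (0.0, 10.0)]  # closing vertex not repeated
print(extrude_block(footprint, height_from_storeys(3)))
```

The example footprint of 20 m by 10 m with three storeys yields a 540 m² facade and a 1800 m³ volume, figures that could feed, for instance, a first-order energy or solar analysis.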

In addition, realistic-looking models are not necessarily closer to reality. They can be outdated, contain errors and be less geometrically and semantically accurate than less realistic-looking models. Also, several studies have shown that higher levels of detail do not always lead to better analysis.

Moreover, renderings of future designs (as artist’s impressions) often show a sunny, green, clean and uncrowded (i.e. optimistic) scenario, whereas reality is often less idyllic. From the DT perspective, the question is how to prevent non-expert users from overvaluing a realistic ‘look & feel’ and undervaluing data quality. How can reality-based visualizations be provided, rather than realistic-looking visualizations that may raise false expectations? This is comparable to the traditional smoothing of contour lines, which was applied to make them look closer to reality. Geospatial experts, however, know that smoothing adjusts the underlying observation data, so the smoothed lines are further from reality than the original, sharper lines.
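The contour-line comparison can be illustrated numerically. The text does not specify which smoothing method was traditionally used, so this Python sketch swaps in Chaikin's corner-cutting algorithm as a familiar example and measures how far the smoothed line moves away from an observed vertex. All function names are illustrative.

```python
import math

Point = tuple[float, float]


def chaikin(line: list[Point], iterations: int = 1) -> list[Point]:
    """Chaikin corner cutting on an open polyline; the two endpoints stay fixed."""
    for _ in range(iterations):
        smoothed = [line[0]]
        for (x0, y0), (x1, y1) in zip(line, line[1:]):
            smoothed.append((0.75 * x0 + 0.25 * x1, 0.75 * y0 + 0.25 * y1))
            smoothed.append((0.25 * x0 + 0.75 * x1, 0.25 * y0 + 0.75 * y1))
        smoothed.append(line[-1])
        line = smoothed
    return line


def distance_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.dist(p, a)
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))


def max_displacement(observed: list[Point], smoothed: list[Point]) -> float:
    """Largest distance between an observed interior vertex and the smoothed line."""
    segments = list(zip(smoothed, smoothed[1:]))
    return max(min(distance_to_segment(p, a, b) for a, b in segments) for p in observed[1:-1])


contour = [(0.0, 0.0), (10.0, 10.0), (20.0, 0.0)]  # the middle vertex is an observed point
print(max_displacement(contour, chaikin(contour)))  # prints 2.5
```

After one iteration, the sharp corner at the observed point (10, 10) is replaced by a line that passes 2.5 units below it: the result looks more natural but no longer honours the observation.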

Sharing data and standardization

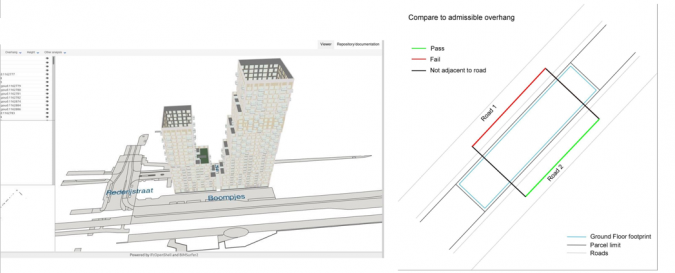

Sharing data across organizations and sectors is still a challenge in the geospatial domain, and this becomes more prominent when even more data (and simulation models) need to be shared and synchronized, as a DT promises. The persistent issue of interoperability remains: how can standards be developed that integrate data from different domains at different levels of detail and different temporal resolutions? This requires not only the harmonization and alignment of standards on a conceptual level, but also standards that work in practice: ones that constrain the flexibility offered by current standards (such as CityGML and IFC) so that there is only one way to represent the semantics and geometry of a specific feature, together with high-quality conversions between them. Such standardization solutions would support GeoBIM applications such as building permit workflows, in which a design can be automatically checked against planning rules and its surrounding environment (see Figure 5).
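An automated permit check of this kind can be sketched as a set of rules evaluated over attributes of a design and its surroundings. The Python below is hypothetical throughout: the rule set, thresholds and field names are invented for illustration, and it assumes the attributes have already been extracted from the design model and the 3D city model, which is precisely the step that unambiguous, constrained standards would make reliable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanningRules:
    """Hypothetical zoning rules for one plot; real rules vary per municipality."""
    max_height_m: float
    min_setback_m: float           # minimum distance to the plot boundary
    max_coverage: float            # built footprint divided by plot area
    max_above_neighbours_m: float  # allowed height above the tallest neighbour


@dataclass(frozen=True)
class Design:
    """Attributes assumed to be extracted from a submitted design model (e.g. IFC)."""
    height_m: float
    setback_m: float
    footprint_m2: float
    plot_m2: float


def check_permit(design: Design, rules: PlanningRules,
                 neighbour_heights_m: list[float]) -> list[str]:
    """Return human-readable violations; an empty list means all checks pass."""
    violations = []
    if design.height_m > rules.max_height_m:
        violations.append(f"height {design.height_m} m exceeds {rules.max_height_m} m")
    if design.setback_m < rules.min_setback_m:
        violations.append(f"setback {design.setback_m} m is below {rules.min_setback_m} m")
    coverage = design.footprint_m2 / design.plot_m2
    if coverage > rules.max_coverage:
        violations.append(f"coverage {coverage:.0%} exceeds {rules.max_coverage:.0%}")
    # Context check against surrounding buildings taken from the 3D city model.
    tallest = max(neighbour_heights_m, default=None)
    if tallest is not None and design.height_m > tallest + rules.max_above_neighbours_m:
        violations.append("design exceeds its surroundings by more than the allowed margin")
    return violations


rules = PlanningRules(max_height_m=12.0, min_setback_m=3.0, max_coverage=0.5,
                      max_above_neighbours_m=3.0)
print(check_permit(Design(11.5, 4.0, 200.0, 500.0), rules, [9.0, 10.0]))  # []
print(check_permit(Design(14.0, 2.0, 300.0, 500.0), rules, [9.0, 10.0]))
```

Returning readable violation messages rather than a single pass/fail flag lets an applicant see which rule to address.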

Other data-sharing issues also remain, such as security, privacy, accountability, ethics and responsibility. In the geospatial domain, agreement frameworks are in place, but these operate in government-dominated contexts where data sharing is promoted. Additional challenges arise when data from private companies with commercial business models is involved, as envisaged in the DT concept.

Practice-readiness

A crucial yet still often overlooked challenge is the practice-readiness of data, software, standards, etc. for solving real-world problems with DT solutions. Many pilots reported in publications show the potential of DTs. But a one-stop-shop dashboard does not yet exist, and research on the fundamentals of DT implementations is rare. It therefore remains unknown how a DT can best operate in practice and how it can deliver everything promised in pilots, not only for one simulation and one virtual representation at one time, but at full scale and also into the (far) future. Real-world implementations must also handle many exceptions and rely on a sophisticated information infrastructure, neither of which is usually part of showcases and pilots.

For DTs in practice, the scope should not be limited to cities that have already implemented advanced smart city solutions. In fact, the vast majority of cities and municipalities are less far along the digital transformation path and do not have the in-house capacity to develop fundamental solutions on their own. Down-to-earth, incremental approaches are therefore needed to support realistic growth paths that can be adjusted along the way.

Lastly, a successful DT implementation in practice requires looking beyond technical solutions. Such implementations also require massive financial investments in digital twin infrastructure; governance and coordination; agreement frameworks and collaboration between many different stakeholders and processes within governments and across sectors; changes in workflows; new skills for employees, etc.

Conclusion

‘Digital twin’ is a catch-all term that has come to be used in many different ways in the geospatial domain since its introduction a few years ago. Without a common understanding of what the concept entails (and what is new about it), DTs risk being treated as unproductive ends in themselves, rather than as the best means to tackle current challenges such as climate adaptation and urbanization. There is also a risk of undirected, fragmented solutions with unrealistic promises, which may fail to turn DTs into a real-world solution beyond pilots and projects. For a practice-ready implementation, the concept needs critical reflection that addresses persistent challenges from the geospatial domain.

However, as outlined above, the DT concept does offer great potential. Firstly, a DT is a striking metaphor, adopted by a wider community, that unquestionably shows the relevance and added value of geospatial technology. In addition, the geospatial domain has a lot to offer to DT implementations. The sector has been studying similar issues (in different contexts, such as GIS, SDI, 3D city models, GeoBIM, smart cities, IoT, etc.) since long before the term DT was introduced. Challenges such as the accurate and up-to-date spatial representation of reality, the standardized integration of geodata from highly heterogeneous and dynamic sources, and the development of a geospatial data infrastructure for multiple purposes are not automatically solved by introducing yet another metaphor or concept.

By all means, we should embrace the DT concept, introduce the additional innovations and address the associated challenges. But for a full-scale implementation that works for any city, organization or country, we need to work on the less visible, and perhaps therefore less rewarding, challenges. This is where the unique knowledge, skills and expertise of the geospatial domain can help make digital twin solutions a reality.

Acknowledgements

This research has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 Research & Innovation Programme (grant agreement no. 677312, ‘Urban Modelling in Higher Dimensions’) and from the Marie Skłodowska-Curie Actions (grant agreement no. 707404, ‘Multisource Spatial data Integration for Smart City Applications’).

Further reading

Ketzler, Bernd; Naserentin, Vasilis; Latino, Fabio; Zangelidis, Christopher; Thuvander, Liane; Logg, Anders (2020): Digital Twins for Cities: A State of the Art Review. Built Environment 46(4), 547–573.

Biljecki, Filip (2020): Exploration of open data in Southeast Asia to generate 3D building models. ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences VI-4/W1-2020, 37–44.

García-Sánchez, Clara; Vitalis, Stelios; Pađen, Ivan; Stoter, Jantien (2021): The impact of level of detail in 3D city models for CFD-based wind flow simulations. 3D GeoInfo 2021, 11–14 October 2021, ISPRS Archives.
