UAV Remote Sensing for Smart Agriculture

Different Data Collection Sensors for a Range of Applications

‘Smart agriculture’, i.e. the efficient use of Earth's resources to feed the world, requires accurate and advanced data acquisition, modelling and management. UAVs can play a critical role in this.

As the world population continues to rise, the demand for food is also increasing. At the same time, a series of global and local challenges are threatening ‘food security’. In this context, various technologies and techniques have been proposed and considered in recent decades to secure the efficient usage of the planet’s agricultural resources, i.e. ‘smart agriculture’. This requires the accurate and advanced acquisition, modelling and management of relevant data. This article presents a brief discussion of how unmanned aerial vehicles (UAVs or ‘drones’) can play a critical role in smart agriculture, including a focus on their capabilities and applications.

What is Smart Agriculture?

Smart agriculture is defined as the application of modern technologies to increase crop yields quantitatively and qualitatively and to improve farming management. It is also known as ‘precision agriculture’ or ‘AgriTech’ in the business world. Smart agriculture is aimed at reducing the costs and risks involved in growing crops, while minimizing the inputs in terms of irrigation, fertilizers, herbicides, pesticides, etc. A major aim of smart agriculture is to efficiently match the available resources to the requirements, based on the observed temporal and spatial variability in the crop field.

Smart agriculture brings together a variety of advanced and evolving technologies and applications, including UAVs and satellite remote sensing, sensor networks based on the Internet of Things (IoT), digital-based prediction for insects and diseases, mechanized irrigation, light and heat control, soil management and other associated analytical tasks. Amongst all those technologies, Earth observation (EO) plays an important role in all aspects of pre-diagnosis, modelling and prevention.

As one of the most rapidly advancing aerial EO technologies, UAVs offer advantages over both traditional field measurement techniques and satellite image analysis techniques. A significant amount of time and effort is needed to produce high-quality data from field measurements. In contrast, UAVs offer several advantages:

- Enabling faster, more flexible and more convenient responses to crop-related problems

- Mapping larger crop fields compared to traditional field measurements

- Facilitating efficient data collection by using different sensors

- Operating even on cloudy days

- Capturing crop information in higher spatial and temporal resolution

- Enabling accurate vegetation maps to be derived that support better decision-making.

Furthermore, UAV images have several advantages over images gained from satellites or manned aerial photogrammetry:

- Lower impact of clouds due to lower flight height

- Large volume of data captured in a short time

- Full control by the user

- Less affected by the atmosphere

- Lower operating costs.

The Use of UAVs in Smart Agriculture

Nowadays, UAVs equipped with an imaging sensor are playing an important role in digital smart agriculture. This technique can be a key element driving improvements in agricultural precision, increases in crop productivity and a greater focus on targeted crop protection.

UAVs can be equipped with different data collection sensors, such as RGB, multispectral (MS), hyperspectral or thermal cameras, or Lidar. Plants reflect light at varying levels depending on their chlorophyll content and biomass. A UAV with a multispectral or hyperspectral camera measures the solar energy reflected by the vegetation in different areas of the spectrum. The level of reflected energy and the relation between different spectral bands enable the health status of the crop to be indexed. There are various vegetation indexes, including the well-known Normalized Difference Vegetation Index (NDVI), which is based on the red and near-infrared (NIR) bands. The NDVI is normally used to indicate leaf coverage and plant health, with high NDVI values indicating a high concentration of biomass. Hence, the NDVI and other vegetation index maps offer great potential for increasing agricultural food production in a more sustainable manner.
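As a minimal sketch of how such an index is derived, the NDVI is conventionally computed per pixel as (NIR - Red) / (NIR + Red), giving values between -1 and 1. The function name, the use of NumPy and the sample reflectance values below are illustrative assumptions rather than part of any particular camera workflow.

```python
import numpy as np

def ndvi(nir, red):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red)."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    # Pixels where both bands are zero (e.g. masked areas) are set to 0
    # instead of triggering a division by zero.
    safe = np.where(denom == 0, 1.0, denom)
    return np.where(denom == 0, 0.0, (nir - red) / safe)

# Hypothetical reflectance values for a 2x2 image patch.
nir_band = [[0.50, 0.30], [0.00, 0.45]]
red_band = [[0.08, 0.25], [0.00, 0.05]]
print(ndvi(nir_band, red_band))
```

Dense, healthy canopy (high NIR reflectance, low red reflectance) yields values close to 1, while bare soil yields values close to 0.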

Consequently, spectral data captured using a UAV can be analysed to assess whether the imaged crop is fresh or dry and to localize any areas showing early signs of infection by pests or diseases. Moreover, this spectral information – when combined with 3D information from sources such as digital surface models (DSMs) and waterways maps – can be used to improve the efficiency of activities such as irrigation or pesticide treatment while reducing the amount of inputs required. Figure 1 summarizes the use of UAVs for smart agriculture and shows the connection between sensors, vegetation indexes and applications as outlined below.

Vegetation Indexes and Measurements

Several spectral vegetation indexes have been proposed and defined to measure plant properties. Properties that are invisible to the naked eye can be converted into visible indexes and measurements by producing appropriate colour maps or indicators. Examples of such indexes include:

- Leaf Area Index (LAI): This is defined as the projection of the one-sided green leaf area per unit of ground area. The LAI is an important index of crop growth status. It can be calculated using ground-based methods or remote sensing. Current estimation methods using optical remote sensing are classified into three types: empirical relationships between the LAI and vegetation indices like the NDVI, inversion of canopy reflectance models, or a mix of both these methods.

- Normalized Difference Vegetation Index (NDVI): This is widely used in smart agriculture to assess the spatial variation of the expected LAI. The NDVI is normally used to indicate leaf coverage and plant health, with high NDVI values representing a higher concentration of biomass.

- Triangular Greenness Index (TGI): This is based on using RGB images by relying on reflectance values in the visible portion of the spectrum to indicate the chlorophyll content in areas of high leaf cover.

- Visible Atmospherically Resistant Index (VARI): This index is derived from RGB images to indicate leaf coverage. It is used to estimate the vegetation fraction with low sensitivity to atmospheric effects.

- Above Ground Biomass (AGB): This comprises all biomass in living plants above the soil including stems, branches, seeds, foliage, etc. Since above-ground biomass is the most visible of the carbon pools, the AGB represents an important indicator of changes or benefits related to carbon mitigation. Accordingly, the AGB is a significant index for monitoring greenhouse gas emissions and other related projects.

- Fractional vegetation cover (FVC): This index includes the ground layer (such as grasses), the mid-layer (such as mid-sized trees) and the canopy layer. The NDVI values of the bare soil reflectance (NDVIs) and the full vegetation reflectance (NDVIv) can be used to determine the FVC.

- Green area index (GAI): This indicates all the green features of a plant, including the leaves, stems and reproductive organs. The complementary Brown Area Index (BAI) refers to non-green areas in the canopy. A generic index known as the Plant Area Index (PAI) indicates both green and non-green parts.
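Two of the indexes listed above can be sketched in a few lines. The example assumes the commonly cited formulation VARI = (G - R) / (G + R - B) and a simple linear mixing model for the FVC, in which a pixel's NDVI is scaled between the bare-soil value (NDVIs) and the full-vegetation value (NDVIv). Other FVC formulations exist, and the function names and sample values are purely illustrative.

```python
import numpy as np

def vari(red, green, blue):
    """VARI = (G - R) / (G + R - B), computed per pixel from RGB bands."""
    red, green, blue = (np.asarray(b, dtype=float) for b in (red, green, blue))
    denom = green + red - blue
    # Guard against division by zero; such pixels are set to 0.
    safe = np.where(denom == 0, 1.0, denom)
    return np.where(denom == 0, 0.0, (green - red) / safe)

def fvc(ndvi, ndvi_soil, ndvi_veg):
    """Linear FVC estimate: (NDVI - NDVIs) / (NDVIv - NDVIs), clipped to [0, 1]."""
    ndvi = np.asarray(ndvi, dtype=float)
    fraction = (ndvi - ndvi_soil) / (ndvi_veg - ndvi_soil)
    return np.clip(fraction, 0.0, 1.0)

# Hypothetical values: bare soil NDVI of 0.1 and full vegetation NDVI of 0.9.
print(fvc([0.1, 0.5, 0.9], ndvi_soil=0.1, ndvi_veg=0.9))
print(vari(red=[0.2], green=[0.4], blue=[0.1]))
```

In practice, NDVIs and NDVIv would be taken from reference pixels in the scene itself, so the values used here should not be read as recommended constants.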

Common Sensors for Smart Agriculture

There are several types of sensors that are commonly used in UAV remote-sensing applications in the agricultural and forestry sectors. They can mainly be classified as follows:

Optical RGB cameras: RGB sensors are the most widely used sensors on UAV systems for smart agriculture. They can take high-resolution images and are normally less expensive than the other types. In addition to being lightweight and easy to use, they are also easily accessible. RGB images can be captured in a wide range of weather situations, including on sunny and cloudy days, provided that certain camera parameters (shutter speed, ISO, etc.) are set up correctly based on the weather conditions to prevent underexposure or overexposure of the image. The fundamental drawback of these sensors is their lower spectral resolution, which makes them inadequate for measuring vegetation-related indices that require spectral data from the non-visible part of the spectrum. Hence, RGB sensors are typically used alongside other types of sensors.

Multispectral and hyperspectral cameras: Spectral data can be very helpful in assessing several biological and physical characteristics of crops. Multispectral and hyperspectral imaging sensors offer higher spectral resolution. Images captured by UAVs fitted with these sensors can provide information about the vegetation’s spectral absorption and reflection in different bands. Diseased crops can be spotted early since healthy vegetation absorbs visible light in the red channel, due to its chlorophyll, while strongly reflecting NIR light. The information in the NIR channel can therefore be used to identify such incidences of disease, even if they are not yet visible in the red channel. Technological improvements in sensor production have resulted in lower-weight multispectral sensors which can even be carried on today’s commercial lightweight UAVs. MicaSense RedEdge and Parrot Sequoia are two examples of such sensors that are able to capture a wider range of wavelengths including NIR. Using such cameras, it is possible to derive the NDVI and some of the other abovementioned vegetation indexes. Figure 2 shows the region of the spectrum that is covered by each of the two cameras. Hyperspectral sensors have also become lighter, with models now designed specifically for drones. These cameras cover a larger range of the spectrum than multispectral sensors but they normally achieve lower spatial resolution. HSC-2 is a sensor that is commonly used for vegetation classification and monitoring. This lightweight hyperspectral camera generates up to a thousand spectral bands in the VIS-VNIR spectral range, which is adjustable within 400-1,000nm.

Thermal cameras: All objects warmer than absolute zero emit infrared radiation, at wavelengths that depend on their temperature. Thermal cameras detect radiation at certain wavelengths, which is subsequently transformed into a grayscale image to represent heat. Some thermal sensors can interpret the received energy and transform it into the absolute temperature of the object. In this case, images can be displayed in colour, with hotter objects normally indicated by red shades and colder ones by blue. Thermal UAV remote sensing has been successfully used in agriculture for plant health inspection based on the assumption that fresh, well-watered vegetation is normally cooler than dry vegetation. Moreover, these images can be subjected to further inspection and processing to extract information that can help with irrigation mapping, fertilizer/pesticide management and yield estimation. Additionally, thermal imaging can support the assessment of a plant’s maturity development and can identify crop damage from harmful events such as fires. FLIR is one of the main camera brands actively being applied in this context, offering the three main wavelength ranges of short-wave, mid-wave and long-wave infrared (Figure 3).
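The relationship between an object's temperature and the wavelength at which it emits most strongly can be illustrated with Wien's displacement law, a standard physics result that the article does not name explicitly: the peak wavelength is inversely proportional to absolute temperature. The sketch below is an illustration under that assumption, with a hypothetical function name, and suggests why long-wave infrared is the natural range for observing crops at ambient temperatures.

```python
# Wien's displacement constant, expressed in micrometre-kelvin.
WIEN_CONSTANT_UM_K = 2897.77

def peak_wavelength_um(temperature_k):
    """Wavelength (in micrometres) of peak black-body emission at a given temperature."""
    if temperature_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return WIEN_CONSTANT_UM_K / temperature_k

# A crop canopy at roughly 300 K (about 27 degrees Celsius) peaks near 9.7 micrometres.
print(round(peak_wavelength_um(300.0), 2))
```

Real vegetation is not a perfect black body, so this gives only an approximate indication of where the emitted energy is concentrated.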

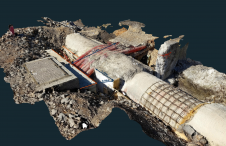

Lidar: The 3D point cloud is now essential for assessing plant height, crop phenology, water flow and more. A more reliable estimation of these parameters can be achieved when a high-density point cloud is available. A UAV equipped with Lidar is the preferred method of acquiring a dense point cloud due to Lidar’s high accuracy and its ability to receive multiple returns. Biomass is traditionally measured by sampling quadrats that were not defoliated by cutting or grazing during the growing season. Although the data is accurate, this traditional method of AGB field sampling is time-consuming, costly and laborious. Moreover, collecting AGB data is difficult in larger areas. Lidar data from UAVs provides a unique opportunity to measure 3D vertical structures. For example, canopy height measurements rely on the laser pulse returning from the vegetation and the ground in multiple reflections and at different elevations. In recent years, digital terrain models (DTMs) and DSMs have been produced from Lidar data as the basis for monitoring plant structures using UAVs and creating canopy height models (CHMs). To determine the CHM, the DSMs captured at different times during the growing season are compared against the initial DTM, which is computed using the first measurements. Methods utilizing Lidar to estimate the PAI based on canopy density have also begun to appear. The fundamental benefit of these Lidar techniques is their sensitivity to both green and non-green plant components.
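The CHM computation described above amounts to subtracting the terrain model from the surface model, cell by cell. The following is a minimal sketch, assuming both rasters have already been resampled to the same grid and are held as NumPy arrays of elevations in metres; the function name and the sample elevations are hypothetical.

```python
import numpy as np

def canopy_height_model(dsm, dtm):
    """CHM = DSM - DTM per grid cell; small negative values are treated as noise."""
    dsm = np.asarray(dsm, dtype=float)
    dtm = np.asarray(dtm, dtype=float)
    if dsm.shape != dtm.shape:
        raise ValueError("DSM and DTM must be on the same grid")
    # Clip below zero: vegetation cannot be lower than the terrain itself.
    return np.clip(dsm - dtm, 0.0, None)

# Hypothetical elevations (m): a DTM from the first flight and a later DSM.
dtm = [[100.0, 100.1], [100.2, 100.3]]
dsm_midseason = [[101.2, 100.0], [101.0, 100.3]]
print(canopy_height_model(dsm_midseason, dtm))
```

Repeating the subtraction for each DSM acquired during the season, always against the same initial DTM, gives a time series of canopy heights.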

Use of Remote-sensing UAVs for Smart Agriculture

The data collected using a UAV equipped with Lidar or imaging cameras can be used for multiple agricultural planning and monitoring purposes, including:

- Crop health monitoring

- Vegetation and soil dryness evaluation

- Fertilizer requirements assessment

- Crop damage and disease detection

- Crop growth observation and harvesting optimization

- Water flow mapping and irrigation management

- Erosion and soil loss monitoring

- Creation of slope and sunlight exposure area maps.

A number of applications are highlighted in more detail below.

Irrigation management: One key use of UAV technology in smart agriculture is crop irrigation management, in which the effectiveness of water utilization is increased by applying the optimal amount of the resource in the optimal location and at the optimal time. UAVs with appropriate sensor types can be used to locate areas of a crop that require additional watering. This has a positive effect on overall crop production. The thermal camera is one of the most commonly used sensors for this application. By employing thermal imaging to map the variability of water potential in a field, irrigation can be optimized. UAV thermal imaging can also help to identify problems such as malfunctioning irrigation systems and uneven irrigation. It makes it possible to detect heat stress before the plant suffers permanent harm, or to spot irrigation problems before the symptoms become visible. In vineyards, for example, UAV-based irrigation management is being used to improve water distribution within the vineyard, to aid in achieving uniform maturity and hence to enhance the product quality.

Crop insurance: Global challenges like global warming and more extreme rainfall throughout the year are increasingly resulting in flooding, causing crops to perish due to a lack of oxygen to the roots and a lack of sunlight on the foliage. In such cases, farmers may submit insurance claims for the damage that has occurred. Before the claim can be honoured, an insurance loss inspector must conduct a crop damage evaluation. Traditionally, such evaluations have been challenging to perform and are frequently inaccurate because the work is mostly done manually. UAVs hold potential for crop damage assessments since they enable images to be captured over a wide coverage area, thus reducing the time and cost involved, while improving the inspection accuracy and supporting efficient documentation.

Weed mapping: Weed mapping is an important application of UAV-based smart agriculture. Weeds are unwanted plants that develop in crops and can lead to several issues. They negatively influence plant growth because they intensify the competition for limited resources such as water or space to grow. Spraying the crop field with herbicides is the conventional method of tackling or controlling weeds. However, excessive herbicide usage can lead to herbicide-resistant weeds, besides having an impact on crop development and productivity. Additionally, it seriously endangers the environment through pollution, not to mention significantly increasing costs. An alternative to this is site-specific weed management (SSWM), in which herbicides are applied in a spatially variable manner rather than being sprayed over the entire field. This requires the creation of an accurate weed cover map, based on UAV imaging, that allows for targeted herbicide application. Such task maps or zone maps can also incorporate information on soil types, topography or pests. When machine learning techniques are applied to UAV imagery, it is possible to identify weeds and crops automatically based on their shape, structure and contrast. Herbicides are then applied specifically to the weeds only, which is better for the crop and also has less of an environmental impact.

Crop damage detection: UAVs can be used to create 3D digital maps of the crop and to assess a variety of factors including crop height and the LAI. Furthermore, UAV-based crop imaging data can be analysed by data processing tools to spot changes in plant biomass and health. As a result, infections may be identified in the early stages, allowing farmers to take action to minimize losses. Generally, UAVs play a role by gathering crop health-relevant data to detect a potential infection in its initial stage, before visual indications appear. Moreover, UAVs can be used during the treatment of infections, when farmers can use them for targeted spraying as well as for precisely tracking the progress of the intervention.

Fertilizer on crops: The most popular fertilizer in the world is nitrogen; it is a vital component of chlorophyll, which is key to plant survival. Controlling the quantity of fertilizer used for crops benefits both the plants and the budget. Accordingly, many farmers nowadays utilize UAVs and smart agriculture techniques to acquire information and map their crop fields in order to provide plants with the fertilizers they require while using less nitrogen overall. The GAI is the most commonly used index to calculate when and where nitrogen application is required. Normally, the resulting crop map is colour-coded according to the GAI values, with lower GAI values indicating a greater need for nitrogen.

Flooded crop area inspection: For insurance loss evaluations, a UAV can be flown over the regions that have been damaged to gather data using RGB or MS cameras. The data can then be processed and displayed using appropriate software to calculate the crop loss. To analyse the crop damage and provide a report of the findings for the farmer, the loss inspector should first build a series of visualizations, such as an orthomosaic map. The loss inspector may also compute the NDVI, which is effective at distinguishing vegetation from bare soil, to determine the degree of damage. After developing the categorized NDVI map that displays the bare soil patches in the crop field, the inspector can use the map to identify which areas of the field require closer assessment of the damage level.
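The categorized NDVI map mentioned above could, in its simplest form, be produced by thresholding. The sketch below is illustrative only: the threshold of 0.2 separating bare soil from vegetation, the function name and the pixel size are hypothetical assumptions that would need to be calibrated for a real field and sensor.

```python
import numpy as np

def bare_soil_report(ndvi_map, pixel_area_m2, soil_threshold=0.2):
    """Flag pixels below an assumed NDVI threshold as bare soil and summarize them."""
    ndvi_map = np.asarray(ndvi_map, dtype=float)
    valid = ~np.isnan(ndvi_map)              # ignore pixels with no data
    bare = valid & (ndvi_map < soil_threshold)
    n_valid = int(valid.sum())
    bare_fraction = float(bare.sum()) / n_valid if n_valid else 0.0
    bare_area_m2 = float(bare.sum()) * pixel_area_m2
    return bare, bare_fraction, bare_area_m2

# Hypothetical 2x2 NDVI map with one no-data pixel and 0.25 m2 per pixel.
ndvi_map = [[0.80, 0.10], [0.05, np.nan]]
mask, fraction, area = bare_soil_report(ndvi_map, pixel_area_m2=0.25)
print(mask)
print(fraction, area)
```

The resulting mask indicates where a closer assessment is needed, while the fraction and area provide figures that could feed into the inspector's report.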

Conclusion

This article outlines the emergence of UAV technology for smart agriculture in various applications related to crop monitoring and management, and highlights the benefits of using UAVs in this sector compared to field and satellite-based techniques. Various vegetation indexes are briefly explained, including their links to the types of sensors used on UAVs, such as RGB, multispectral and thermal cameras and Lidar.
